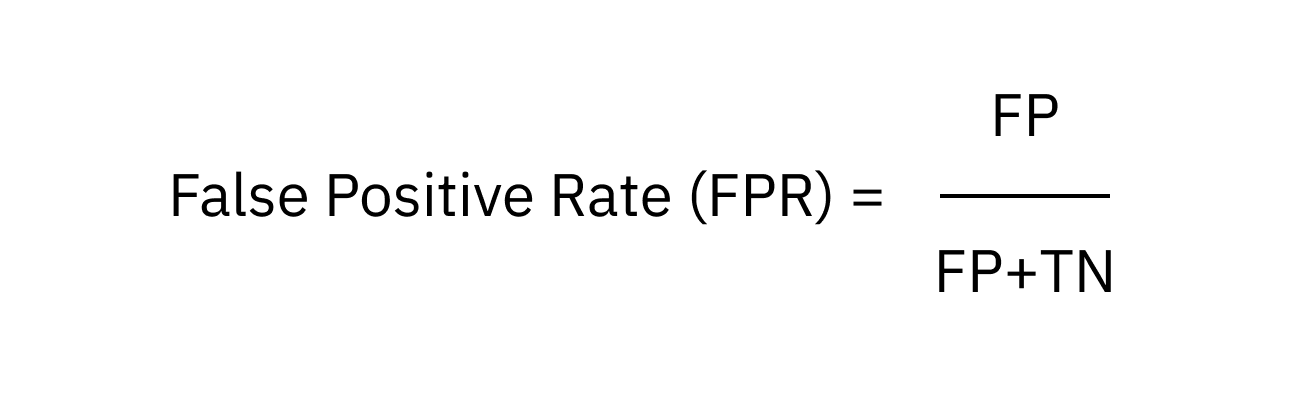

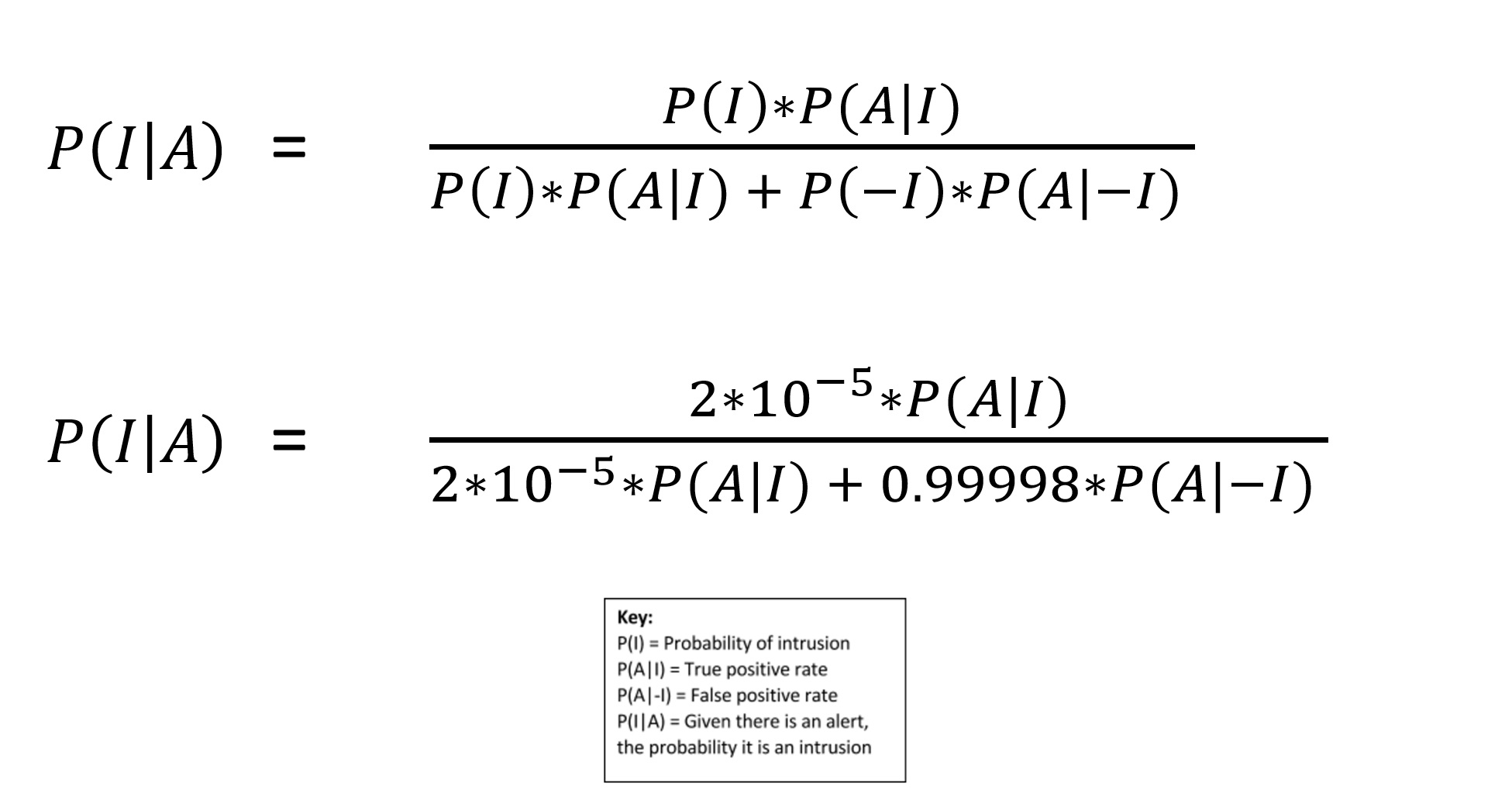

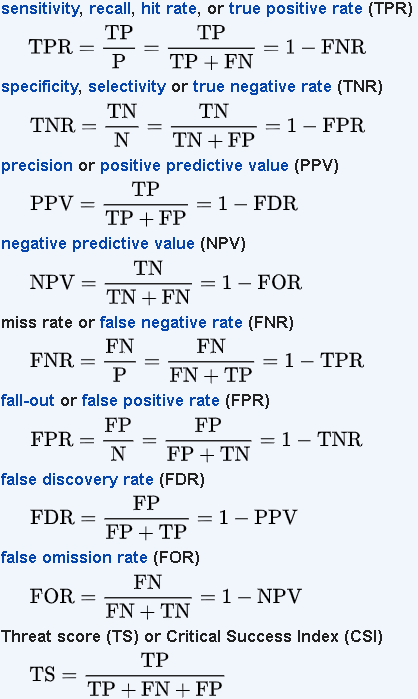

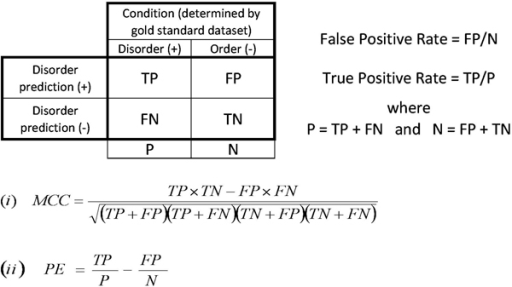

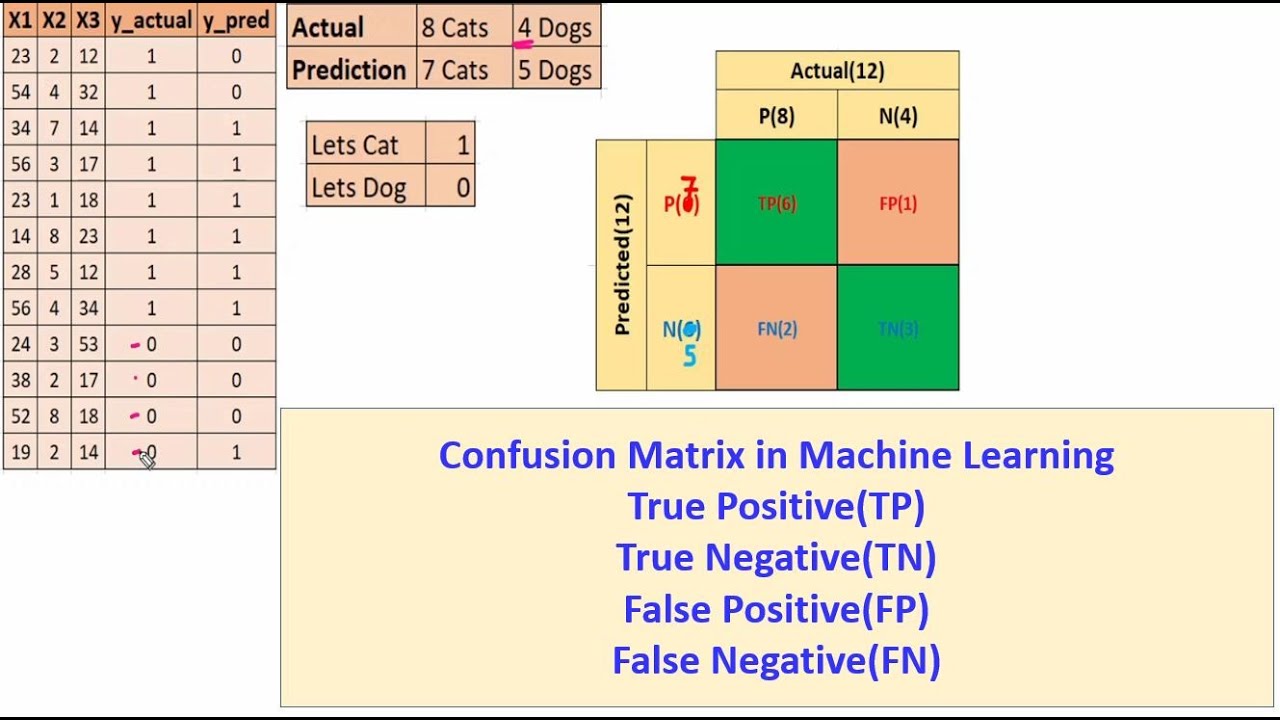

False Positive Rate Formula

What you have is therefore probably a true positive rate and a false negative rate. The distinction matters because it emphasizes that both numbers have a numerator and a denominator. Where things get a bit confusing is that you can find several definitions of false positive rate and false negative rate, with different denominators.

The false positive rate, or fall-out, is defined as

$$\text{Fall-out} = \frac{FP}{FP + TN}$$

In my data, a given image may have many objects, so almost every image has at least one box. I am counting a predicted box as a true positive if its IoU with a truth box is above a certain threshold, and as a false positive otherwise.

FPR: False Positive Rate; FNR: False Negative Rate; FAR: False Acceptance Rate; FRR: False Rejection Rate.
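As a minimal sketch of the fall-out definition above, the following Python computes FPR and its counterpart FNR from confusion-matrix counts. The function names and the example counts are hypothetical, chosen only for illustration. The sketch assumes that true negatives can actually be counted, which is not obviously the case for per-box object detection.

```python
def false_positive_rate(fp: int, tn: int) -> float:
    """Fall-out: FP / (FP + TN), the share of actual negatives flagged as positive."""
    negatives = fp + tn
    if negatives == 0:
        raise ValueError("FPR is undefined when there are no actual negatives")
    return fp / negatives


def false_negative_rate(fn: int, tp: int) -> float:
    """Miss rate: FN / (FN + TP), the share of actual positives that were missed."""
    positives = fn + tp
    if positives == 0:
        raise ValueError("FNR is undefined when there are no actual positives")
    return fn / positives


if __name__ == "__main__":
    # Illustrative counts only (not taken from any dataset in the text).
    print(false_positive_rate(fp=5, tn=95))
    print(false_negative_rate(fn=10, tp=40))
```

Both rates share the pattern the snippets stress: a numerator of errors over a denominator of all cases in the same actual class.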

False Positive Rate Formula

https://assets-global.website-files.com/61ead44637176294fdd344ff/642c36bef7d838ca76c2de0d_false-positive-rate-formula.png

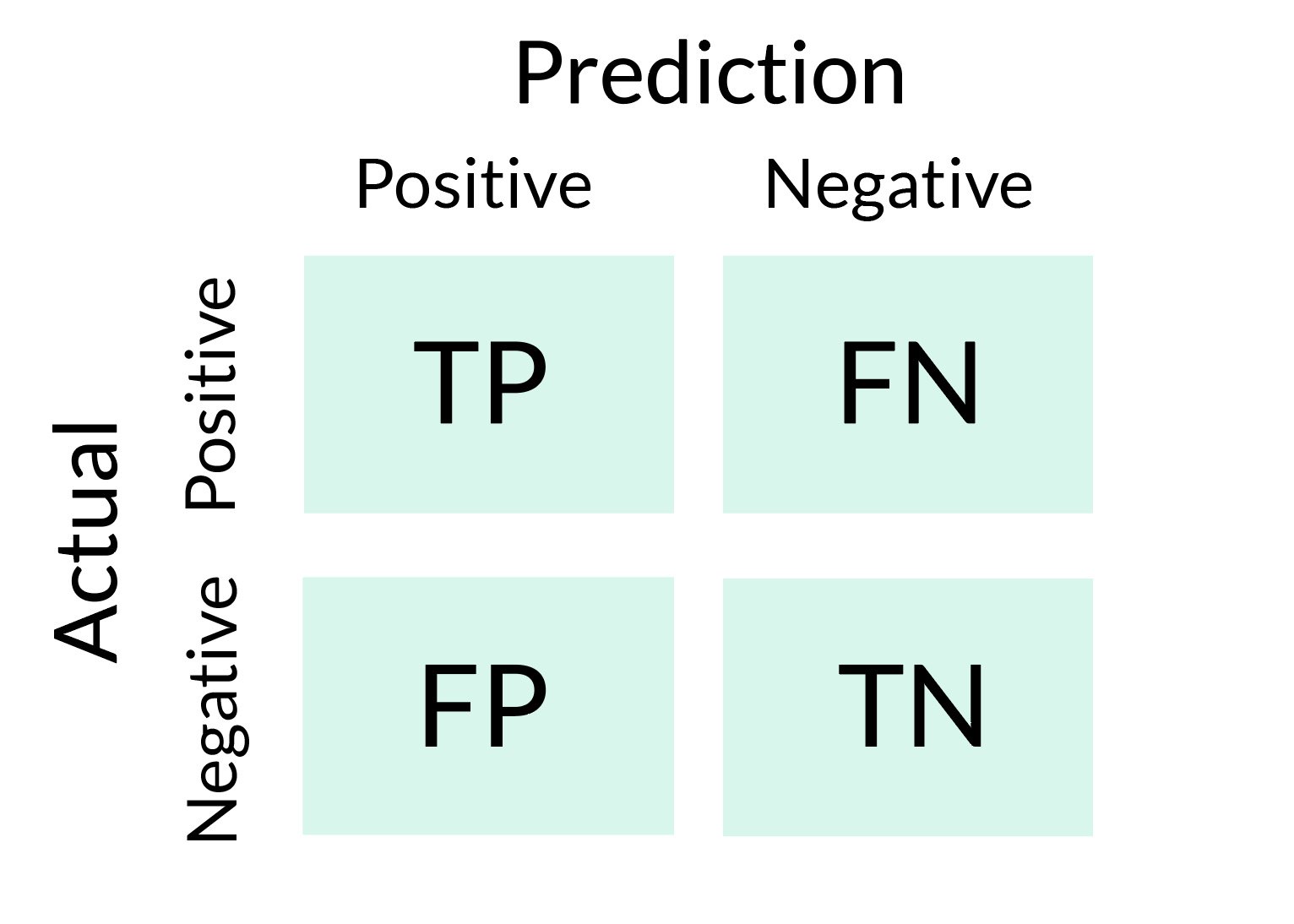

Confusion Matrix And Performance Equations The Confusion Matrix

https://www.researchgate.net/publication/340034692/figure/fig1/AS:870930318385152@1584657377357/Confusion-matrix-and-performance-equations-The-confusion-matrix-included-four.png

What Is False Positive Rate

https://www.iguazio.com/wp-content/uploads/2023/06/Screenshot-2023-06-19-at-15.26.56.png

Can Precision and Recall be used to generate TPR or FPR? In other words, is there any formula that relates the following evaluation metrics: True Positive Rate (TPR) with either Precision or Recall (e.g., TPR as some function of Precision and Recall), and False Positive Rate (FPR) with Precision or Recall (e.g., FPR as some function of Recall and Precision)?

I would like to look at the size of the expected false positive and false negative rates in employment hiring decisions. Let's assume that it is useful to dichotomize job performance after hiring. The hiring decisions are based on a predictor with a ...

Where Prevalence is the ratio of positive conditions (the number of positives in your test set) to the total population. You should have the number of positive conditions in your test data so that you may get the F1 score. Note that the F1 ...

Actually, you are calculating the false positive rate (FPR) instead of the false positives; it seems that the wiki example uses this term in a non-rigorous way. Usually FP represents false positives, TN represents true negatives, and false positive rate ...
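One way to see why prevalence enters when relating Precision and Recall to FPR is the short derivation below. It is a sketch under stated assumptions: the symbols $P = TP + FN$ (actual positives), $N = FP + TN$ (actual negatives) and $\pi = P / (P + N)$ (prevalence) are notation introduced here, not taken from the quoted snippets, and Precision is assumed to be non-zero.

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP}
  \;\Longrightarrow\; FP = TP \cdot \frac{1 - \text{Precision}}{\text{Precision}} \\
\text{Recall} &= \text{TPR} = \frac{TP}{P}
  \;\Longrightarrow\; TP = \text{Recall} \cdot P \\
\text{FPR} &= \frac{FP}{N}
  = \text{Recall} \cdot \frac{1 - \text{Precision}}{\text{Precision}} \cdot \frac{P}{N}
  = \text{Recall} \cdot \frac{1 - \text{Precision}}{\text{Precision}} \cdot \frac{\pi}{1 - \pi}
\end{align}
```

TPR is Recall by definition, so no extra information is needed for it. FPR, by contrast, cannot be recovered from Precision and Recall alone; the ratio of positives to negatives must also be known.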

More pictures related to False Positive Rate Formula

Image Result For False Positives And Negatives False Positive

https://i.pinimg.com/originals/18/7f/82/187f82e15145fdce5e09059eebc92b34.png

Classification Metrics Classification Metrics And Confusion By

https://miro.medium.com/max/974/1*H_XIN0mknyo0Maw4pKdQhw.png

Evaluating Classification Models A Guided Walkthrough Using Sci Kit

https://miro.medium.com/max/1400/0*T5Klv1yPgZFHkJfe

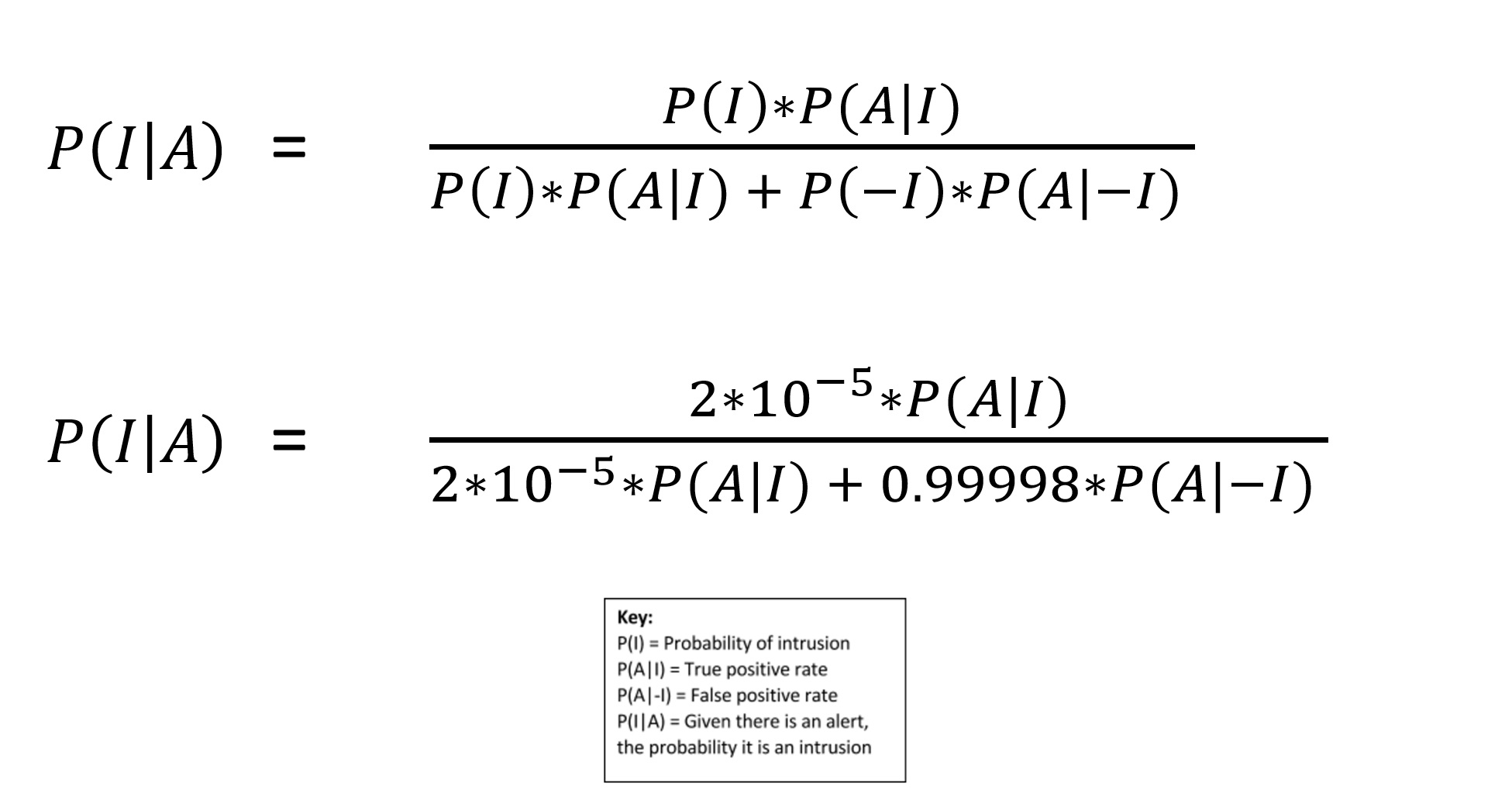

Failure detection rate (FDR) and false alarm rate (FAR) are used in the anomaly detection and failure detection domains to evaluate classification model performance. However, I don't see any clear definition of failure detection rate (FDR) and false alarm rate (FAR) that would let us relate them to precision and recall.

Many, if not most, people have the intuition that a test that's 99% accurate implies a false positive rate of 1%, regardless of the number of true positives in the population and the population size. It is counterintuitive to many people that a highly accurate test can give you way more false positives than true positives in some circumstances.
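The base-rate effect described above can be checked with a small Python sketch. It assumes "99% accurate" means both sensitivity and specificity are 0.99, and it uses a hypothetical population of 100,000 with a prevalence of 0.1%. These numbers and the function name `expected_counts` are illustrative assumptions, not figures from the text.

```python
def expected_counts(population: int, prevalence: float,
                    sensitivity: float, specificity: float) -> dict:
    """Expected confusion-matrix counts for a test applied to a whole population."""
    positives = population * prevalence      # people who truly have the condition
    negatives = population - positives       # people who truly do not
    tp = sensitivity * positives             # correctly flagged positives
    fn = positives - tp                      # missed positives
    tn = specificity * negatives             # correctly cleared negatives
    fp = negatives - tn                      # negatives wrongly flagged
    return {"TP": tp, "FP": fp, "TN": tn, "FN": fn}


if __name__ == "__main__":
    # Hypothetical scenario: rare condition, "99% accurate" test.
    counts = expected_counts(population=100_000, prevalence=0.001,
                             sensitivity=0.99, specificity=0.99)
    fpr = counts["FP"] / (counts["FP"] + counts["TN"])
    precision = counts["TP"] / (counts["TP"] + counts["FP"])
    print(counts)
    print(f"FPR = {fpr:.3f}, precision = {precision:.3f}")
```

Under these assumptions the false positive rate stays at about 1%, yet false positives outnumber true positives roughly ten to one, because the 1% is taken over a much larger pool of actual negatives.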

Suppressing False Alerts In Data Security O Reilly

https://www.oreilly.com/content/wp-content/uploads/sites/2/2020/01/ram_f3-d31d6d2b660c56e0aac65d752fa9898b.jpg

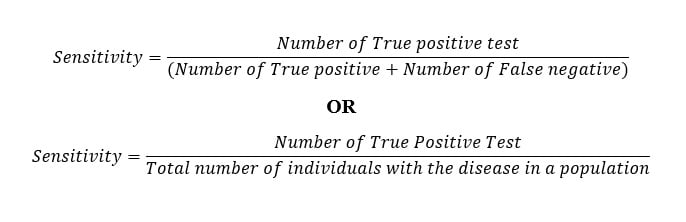

What Is Sensitivity Specificity False Positive False Negative

https://microbenotes.com/wp-content/uploads/2020/04/Sensitivity-Formula.jpg

Understanding The ROC Curve In Three Visual Steps By Valeria Cortez

Suppressing False Alerts In Data Security O Reilly

False Positives negatives And Bayes Rule For COVID 19 Testing By

Contingency Table And Common Performance Measurements Open i

Calculating False Positive False Negative Probabilities Using Bayes

How To Solve false Positive Probability Problems YouTube

Testing And Screening Stats Medbullets Step 1

Confusion Matrix True Positive TP True Negative TN False

References Sensitivity Vs Specificity Vs Recall Cross Validated
