F Beta Score

I completely share your concerns about proper scoring rules, but it is possible to derive quadratic errors, analogous to the Brier score, that capture certain aspects of predictive behaviour such as sensitivity, specificity, or the predictive values, and consequently also an analogue of the F-measures.

The formula for the F-measure with $\beta = 1$ (F1) is the same as the formula for the equivalent resistance of two resistors placed in parallel, apart from the factor 2. This gives one possible interpretation, and you can think of either electrical or thermal resistances.

Wikipedia defines the F1 score (or F-score) as the harmonic mean of precision and recall. But aren't precision and recall only defined once the predicted values of, for example, a logistic regression have been converted to binary labels using a cutoff? And speaking of cutoffs: what is the connection between the F1 score and the optimal threshold?
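To make both points concrete, here is a minimal sketch in plain Python. It writes F1 as twice the "parallel resistance" of precision and recall, then sweeps candidate cutoffs over a small set of scores to find the one that maximizes F1. The scores, labels, and helper names (`parallel`, `f1_at_threshold`) are made up for illustration and do not come from any of the quoted posts.

```python
def parallel(a, b):
    """Equivalent resistance of two resistors in parallel: a*b / (a + b)."""
    return a * b / (a + b) if a + b > 0 else 0.0


def f1_at_threshold(scores, labels, threshold):
    """Binarize scores at `threshold`, then return F1 = 2 * parallel(P, R)."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * parallel(precision, recall)


# Hypothetical predicted probabilities and true labels (illustration only).
scores = [0.1, 0.4, 0.35, 0.8, 0.65, 0.9]
labels = [0, 0, 1, 1, 0, 1]

# Every distinct score is a candidate cutoff; keep the one with the highest F1.
candidates = sorted(set(scores))
best_threshold = max(candidates, key=lambda t: f1_at_threshold(scores, labels, t))
best_f1 = f1_at_threshold(scores, labels, best_threshold)
print(best_threshold, round(best_f1, 3))
```

The F1 score exists only after a cutoff has been chosen, so the "optimal threshold" in this sense is simply the cutoff at which F1 peaks on the data at hand. It depends on the data and need not be 0.5.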

F Beta Score

F Beta Score

https://media.licdn.com/dms/image/v2/C5622AQF-udoYzmH9aQ/feedshare-shrink_800/feedshare-shrink_800/0/1645527173286?e=2147483647&v=beta&t=taCmpLD0lDwWVPtVsNI6mjeu5ayCOhEAi5R-mjz5LQk

Understanding The F1 Score Metric For Evaluating Grammar Error

https://praful932.dev/assets/images/blog-3-f1-score-gec/f_beta.png

F1 Score In Machine Learning Intro Calculation

https://cdn.prod.website-files.com/5d7b77b063a9066d83e1209c/639c3d0f93545970016d3dc7_f-beta-eqn.webp

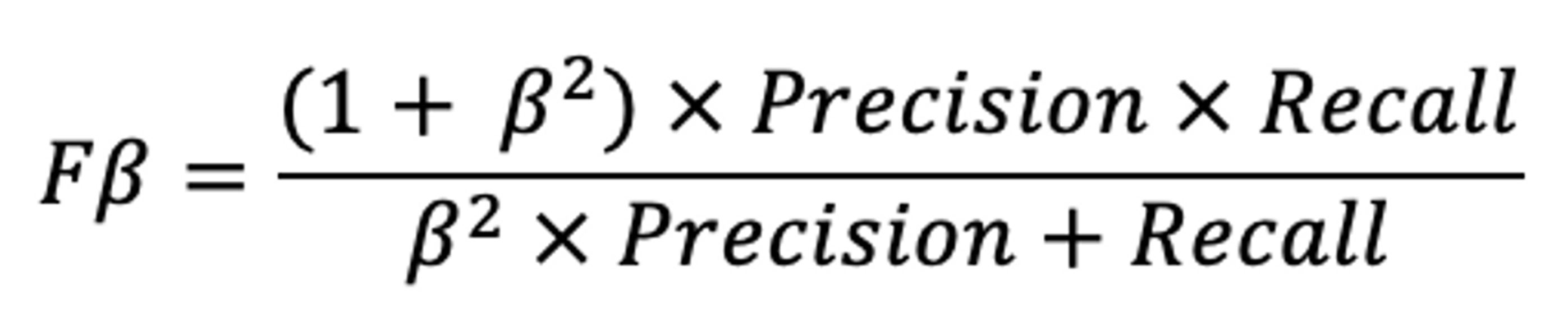

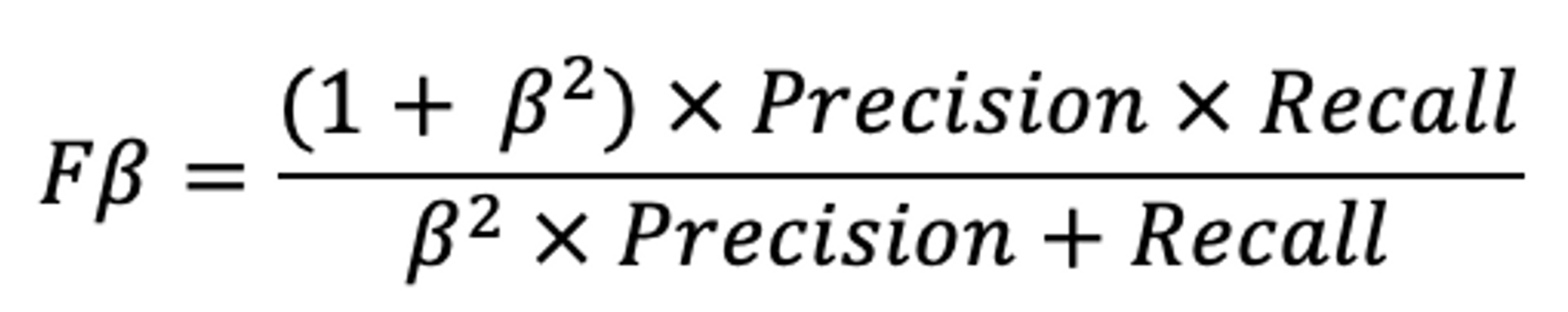

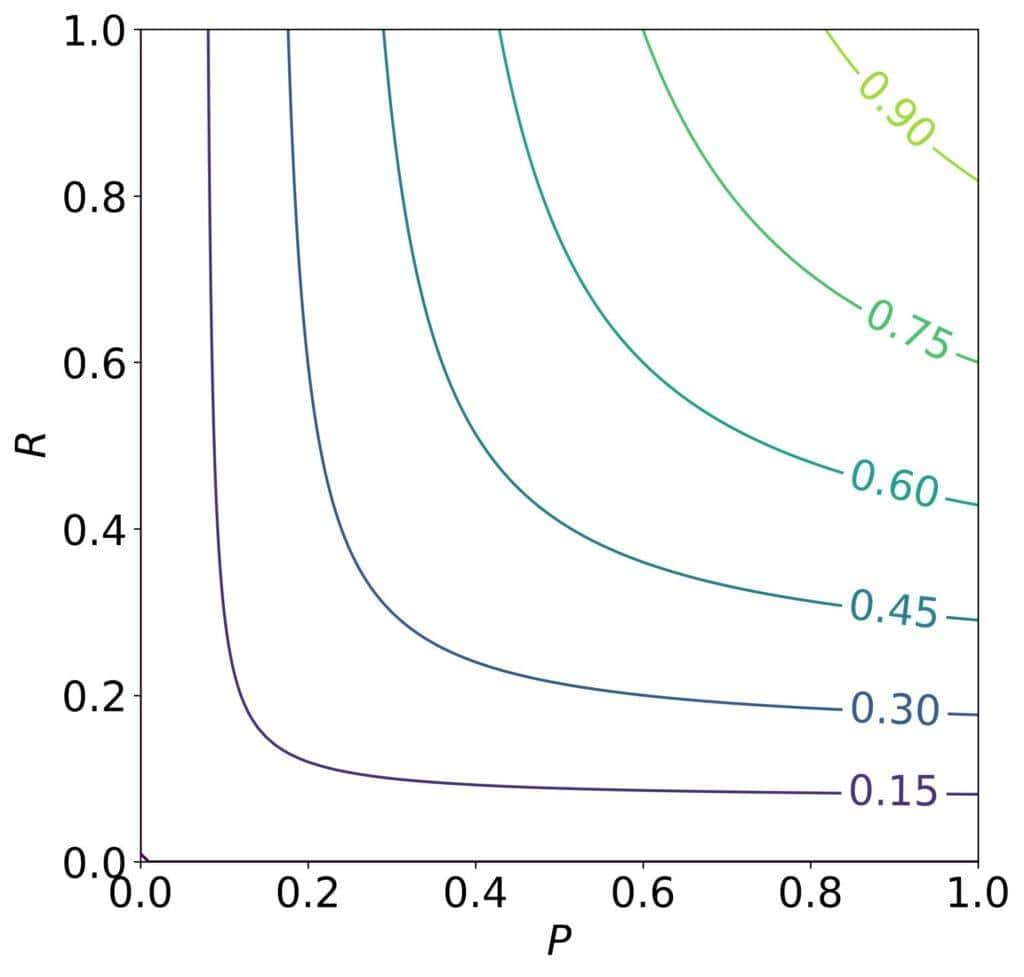

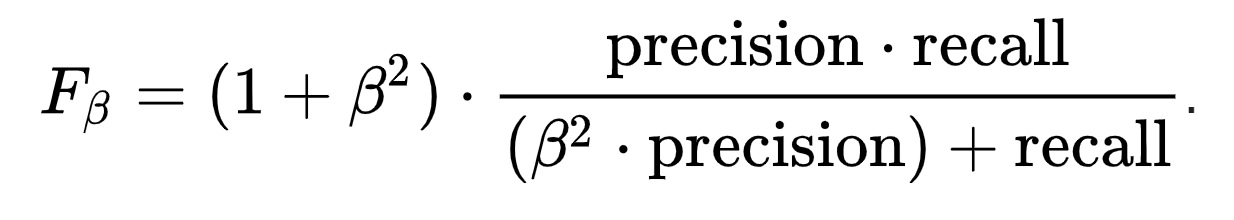

The F-beta score is calculated as follows:

$$F_\beta = (1 + \beta^2) \frac{PR}{\beta^2 P + R}$$

However, according to some sources, if I want to put more emphasis on precision I should use $\beta < 1$, and conversely, if I want to put less emphasis on precision than on recall, I should use $\beta > 1$.

I'm using sklearn's confusion matrix and classification report methods to compute the confusion matrix and F1 score of a simple multiclass classification project. For some classes, the F1 score I'm getting is higher than the accuracy, and this seems strange to me. Is that possible, or am I doing something wrong?
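On the second question, a small hand-made example suggests that a per-class F1 above the overall accuracy is entirely possible. The sketch below uses plain Python rather than sklearn, and the labels are invented for illustration: class "A" is always predicted correctly while "B" and "C" are often confused with each other.

```python
def per_class_f1(y_true, y_pred, cls):
    """One-vs-rest F1 for `cls`, using F1 = 2*TP / (2*TP + FP + FN)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical multiclass labels (illustration only).
y_true = ["A"] * 4 + ["B"] * 3 + ["C"] * 3
y_pred = ["A"] * 4 + ["B", "C", "C"] + ["C", "B", "B"]

# Overall accuracy: share of samples whose predicted class is correct.
accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
f1 = {cls: per_class_f1(y_true, y_pred, cls) for cls in ("A", "B", "C")}

print("accuracy:", accuracy)
for cls, value in f1.items():
    print(cls, round(value, 3))
```

Accuracy is computed over all classes at once, whereas each per-class F1 looks only at how well that one class is separated from the rest. A well-predicted class can therefore score above the overall accuracy while poorly predicted classes score below it.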

I am comparing an ML classifier to a bunch of other benchmark classifiers by F1 score. By AUPRC, my classifier does worse than the other benchmark methods. When I compared F1 scores, however, I got a ...

The Brier score is a function of probabilistic classifications, whereas the confusion matrix can be a function of these probabilistic classifications plus a threshold. Thus the Brier or log score is not just a function of the confusion matrix or of accuracy.
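A short sketch may help illustrate the last point. It builds two hypothetical sets of predicted probabilities that yield the same confusion matrix at a cutoff of 0.5 but clearly different Brier scores. The data and function names are assumptions made for this illustration.

```python
def brier_score(probs, labels):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)


def confusion_counts(probs, labels, threshold=0.5):
    """Return (tp, fp, fn, tn) after binarizing probabilities at `threshold`."""
    tp = fp = fn = tn = 0
    for p, y in zip(probs, labels):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1 and y == 0:
            fp += 1
        elif pred == 0 and y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


# Hypothetical data: both models rank every case on the correct side of 0.5,
# but one is far more confident than the other.
labels = [1, 1, 0, 0]
confident = [0.9, 0.8, 0.1, 0.2]
hesitant = [0.6, 0.55, 0.45, 0.4]

print(confusion_counts(confident, labels), brier_score(confident, labels))
print(confusion_counts(hesitant, labels), brier_score(hesitant, labels))
```

Any metric derived from the confusion matrix alone (accuracy, precision, recall, F-beta) cannot tell these two models apart at this threshold, while the Brier score can.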


What happens when $\beta$ goes past one, e.g. 2, making it the F2 score, or more? Will more weight be given to precision? (Naveen Reddy Marthala, commented Aug 28, 2020 at 7:32)

I actually think that AUPRC is a good way to go: it essentially measures precision as a function of recall at varying thresholds. But since you've mentioned that already, there's one more thing you can consider: the F-beta measure.
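The question in the comment can be checked numerically with the standard definition $F_\beta = (1 + \beta^2) PR / (\beta^2 P + R)$. The sketch below compares two hypothetical classifiers, one with high precision and one with high recall, under $\beta = 0.5$, $1$ and $2$. The precision and recall values are invented for illustration.

```python
def f_beta(precision, recall, beta):
    """F-beta score: (1 + beta^2) * P * R / (beta^2 * P + R)."""
    b2 = beta ** 2
    denom = b2 * precision + recall
    return (1 + b2) * precision * recall / denom if denom else 0.0


# Hypothetical (precision, recall) pairs, illustration only.
high_precision = (0.9, 0.5)
high_recall = (0.5, 0.9)

for beta in (0.5, 1.0, 2.0):
    hp = f_beta(*high_precision, beta)
    hr = f_beta(*high_recall, beta)
    print(f"beta={beta}: high-precision={hp:.3f}, high-recall={hr:.3f}")
```

With $\beta = 2$ the high-recall classifier scores higher, and with $\beta = 0.5$ the high-precision one does. So, under this definition, going past one gives more weight to recall rather than to precision. For real projects, sklearn provides `sklearn.metrics.fbeta_score`, which computes the same quantity directly from labels.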



https://stats.stackexchange.com/questions/451677/what-are-best-practi…

https://stats.stackexchange.com/questions/49226

F Beta Score Baeldung On Computer Science

Confusion Matrix Accuracy Precision Recall F1 Score Yarak001

F1 Score F beta Score YouTube

Calculate F1 And F Beta Score YouTube

Performance Metrics Accuracy Precision Recall And F Beta Score

F beta Score And Categorical Accuracy GRU Download Scientific Diagram

F beta Score And Categorical Accuracy LSTM Download Scientific Diagram

Day 61 Performance Metrics Confusion Matrix Accuracy Precision

Confusion Matrix Very Much Useful When We Get Confused By Ramkumar
