F1 Score Formula

Similarly to how L2 loss can penalize the largest mistakes more than L1 loss, the IoU metric tends to have a squaring effect on the errors relative to the F score. So the F score tends to measure something closer to average performance, while the IoU score measures something closer to worst-case performance.
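
The "squaring effect" can be made concrete. For a single class, F1 (Dice) is 2TP / (2TP + FP + FN) and IoU (Jaccard) is TP / (TP + FP + FN), which gives the identity IoU = F1 / (2 - F1). The minimal sketch below computes both from raw counts. The three (tp, fp, fn) triples are made-up illustrative values, not results from any dataset, and `f1_and_iou` is a hypothetical helper name.

```python
def f1_and_iou(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (F1, IoU) for one class from raw confusion counts."""
    f1 = 2 * tp / (2 * tp + fp + fn)   # Dice / F1
    iou = tp / (tp + fp + fn)          # Jaccard / IoU
    return f1, iou


# Illustrative counts: the same total error split evenly between FP and FN,
# with the share of errors growing from row to row.
for tp, fp, fn in [(90, 5, 5), (50, 25, 25), (10, 45, 45)]:
    f1, iou = f1_and_iou(tp, fp, fn)
    # The last column checks the identity IoU = F1 / (2 - F1).
    print(f"tp={tp:3d} fp={fp:3d} fn={fn:3d}  "
          f"F1={f1:.3f}  IoU={iou:.3f}  F1/(2-F1)={f1 / (2 - f1):.3f}")
```

Because IoU / F1 = 1 / (2 - F1), the two scores nearly agree when F1 is high (0.947 vs 0.900 in the first row) and diverge as F1 drops (0.182 vs 0.100 in the last row), so an average of IoU over many images is pulled down harder by the worst images than an average of F1.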

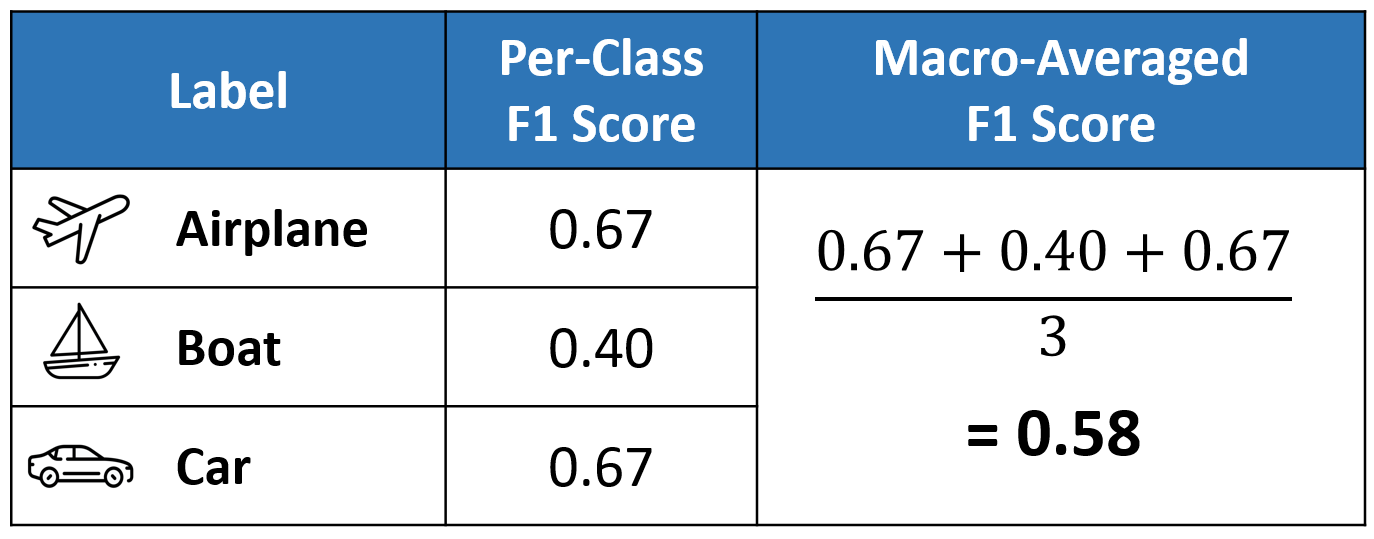

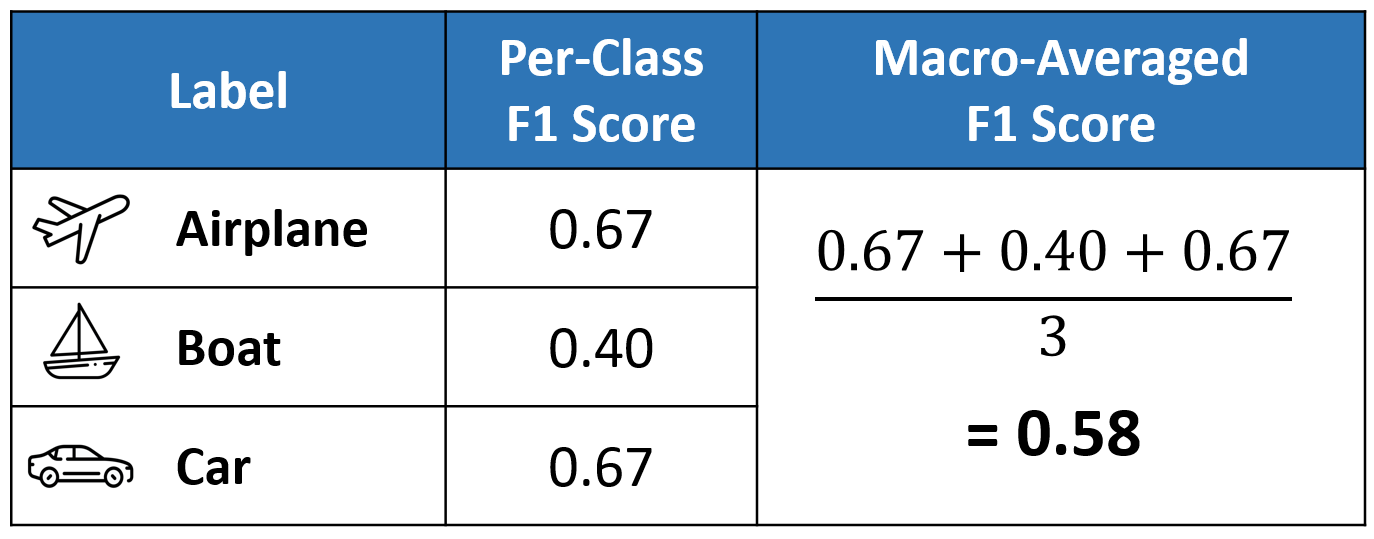

How can I calculate precision and recall so that it becomes easy to calculate the F1 score? The normal confusion matrix has 2 x 2 dimensions; however, when it becomes 3 x 3, I don't know how to calculate precision and recall.

Under such situations, the F1 score could be a better metric. The F1 score is a common choice for information retrieval problems and is popular in industry settings. Here is a well-explained example: "Building ML models is hard. Deploying them in …"
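
For a K x K confusion matrix, each class can be treated as the "positive" class in turn. Precision for class k is the diagonal entry divided by its column total (everything predicted as k), and recall is the diagonal entry divided by its row total (everything that truly is k). Below is a minimal pure-Python sketch. It assumes rows are actual classes and columns are predicted classes (the scikit-learn convention), and the matrix values and the helper `per_class_scores` are hypothetical.

```python
def per_class_scores(cm):
    """Per-class (precision, recall, F1) from a square confusion matrix.

    Assumes cm[i][j] counts samples whose true class is i and whose
    predicted class is j (rows = actual, columns = predicted).
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k][j] for j in range(n)) - tp  # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


# Hypothetical 3 x 3 matrix: rows are actual A, B, C; columns are predicted A, B, C.
cm = [
    [5, 1, 0],
    [2, 3, 1],
    [0, 1, 7],
]
for label, (p, r, f) in zip("ABC", per_class_scores(cm)):
    print(f"class {label}: precision={p:.3f} recall={r:.3f} F1={f:.3f}")
```

Averaging the three per-class F1 values gives the macro-averaged F1. scikit-learn's `precision_recall_fscore_support(y_true, y_pred, average=None)` reports the same per-class quantities directly from label arrays.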

You should know the number of positive conditions in your test data so that you can compute the F1 score. Note that the F1 score does not take true negatives into account. You can find further information in the Wikipedia article on the confusion matrix; I recommend that you read it. Hope this helps.

The scikit-learn package in Python has two metrics, `f1_score` and `fbeta_score`. Each of these has a weighted option (`average='weighted'`), where the class-wise F1 scores are weighted by their support, i.e. the number of examples in that class, before averaging. Is there any existing literature on this metric (papers, publications, etc.)? I can't seem to find any.
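
The two claims above (F1 ignores true negatives, and the weighted option weights each class's F1 by its support) can be checked with a few lines of plain Python. This is a sketch, not scikit-learn's implementation. `f1_per_class` and `weighted_f1` are hypothetical helpers meant to mirror `f1_score(y_true, y_pred, average='weighted')`, and the labels are toy data.

```python
from collections import Counter


def f1_per_class(y_true, y_pred, label):
    """F1 with `label` as the positive class; true negatives are never counted."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def weighted_f1(y_true, y_pred):
    """Support-weighted mean of per-class F1 (support = count of each class in y_true)."""
    support = Counter(y_true)
    total = sum(support.values())
    return sum(n * f1_per_class(y_true, y_pred, c) for c, n in support.items()) / total


# Toy multi-class labels.
y_true = ["a", "a", "a", "b", "b", "c"]
y_pred = ["a", "a", "b", "b", "c", "c"]
print(f"weighted F1: {weighted_f1(y_true, y_pred):.3f}")

# Appending 1000 extra true negatives leaves the positive class's F1 unchanged.
bin_true = ["pos", "pos", "neg", "neg"]
bin_pred = ["pos", "neg", "pos", "neg"]
base = f1_per_class(bin_true, bin_pred, "pos")
more = f1_per_class(bin_true + ["neg"] * 1000, bin_pred + ["neg"] * 1000, "pos")
print(base, more)  # 0.5 0.5
```

Here the per-class F1 scores are 0.8, 0.5 and 2/3 with supports 3, 2 and 1, so the weighted mean is about 0.678.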

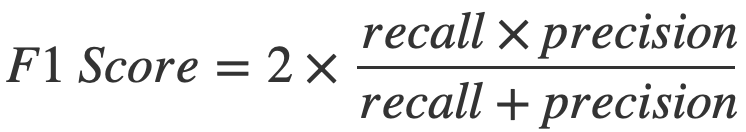

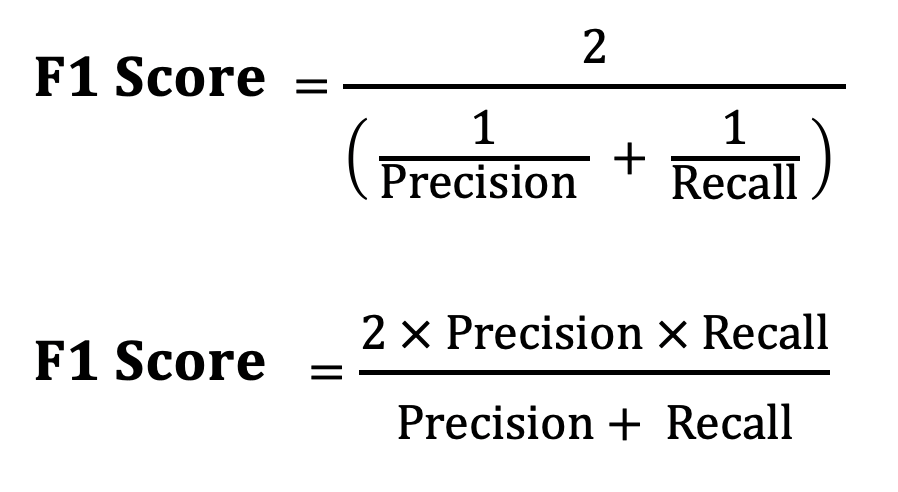

Now let's write the equation for the F1 score:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

One might try to prove that recall is always greater than or equal to the F1 score, but this does not hold in general. Because F1 is the harmonic mean of precision and recall, it always lies between the two. For positive precision $P$ and recall $R$:

$$F_1 \le R \iff \frac{2PR}{P + R} \le R \iff 2P \le P + R \iff P \le R$$

So recall is at least the F1 score only when recall is at least precision.

It has also been pointed out that how you calculate the F1 score matters. For example, if you take the mean of the F1 scores over all the CV runs, you will get a different value than if you first add up the TP, TN, FP and FN counts and then calculate the F1 score from the pooled raw counts; the source argues that the second approach gives a different and better value.
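
The difference between averaging per-fold F1 and pooling counts is easy to reproduce. The sketch below uses hypothetical per-fold (TP, FP, FN) counts from a 3-fold cross-validation. TN is omitted because the F1 formula does not use it, and `f1_from_counts` is a hypothetical helper name.

```python
def f1_from_counts(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); returns 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical per-fold counts (tp, fp, fn) from a 3-fold cross-validation.
folds = [(8, 2, 0), (1, 0, 4), (5, 5, 5)]

# Option 1: compute F1 in each fold, then average the fold scores.
mean_of_f1 = sum(f1_from_counts(*c) for c in folds) / len(folds)

# Option 2: pool the raw counts over all folds, then compute F1 once.
tp, fp, fn = (sum(c[i] for c in folds) for i in range(3))
pooled_f1 = f1_from_counts(tp, fp, fn)

print(f"mean of per-fold F1: {mean_of_f1:.3f}")
print(f"F1 of pooled counts: {pooled_f1:.3f}")
```

With these counts the mean of the fold scores is about 0.574, while the pooled F1 is 28/44 ≈ 0.636. Which one to report is a methodological choice, but it should be stated explicitly.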

The Dice coefficient, also known as the Sørensen-Dice coefficient or the F1 score, is defined as two times the area of the intersection of A and B divided by the sum of the areas of A and B:

$$\text{Dice} = \frac{2\,|A \cap B|}{|A| + |B|} = \frac{2\,TP}{2\,TP + FP + FN}$$

where TP = true positives, FP = false positives and FN = false negatives. The Dice score is a performance metric for image segmentation.

Unbalanced classes where one class is more important than the other: for example, in fraud detection it is more important to correctly label an instance as fraudulent than to correctly label a non-fraudulent one. In this case, I would pick the classifier that has a good F1 score only on the important class. Recall that the F1 score is available per …
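
Below is a minimal sketch of the Dice definition on binary segmentation masks. It assumes the masks are flattened into equal-length 0/1 lists (real pipelines would usually use arrays) and checks that the set form 2|A ∩ B| / (|A| + |B|) and the count form 2TP / (2TP + FP + FN) agree. Returning 1.0 when both masks are empty is a common convention assumed here, not taken from the text, and the function names are hypothetical.

```python
def dice(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks of equal length."""
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(pred) + sum(truth)
    # Assumed convention: two empty masks count as a perfect match.
    return 2 * inter / total if total else 1.0


def dice_from_counts(pred, truth):
    """Same score via confusion counts: 2TP / (2TP + FP + FN)."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


# Toy 8-pixel masks (1 = foreground).
truth = [1, 1, 1, 1, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0]
print(dice(pred, truth), dice_from_counts(pred, truth))  # 0.75 0.75
```

The two forms match because |A| + |B| = (TP + FP) + (TP + FN) when A is the predicted mask and B the ground truth.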

https://stats.stackexchange.com/questions/273537

https://stats.stackexchange.com/questions/91044

F1 Score Definition Encord

How To Calculate The F1 Score In Machine Learning Shiksha Online

How To Calculate The F1 Score In R StackLima

What Is Considered A Good F1 Score Statology

F1 Score In Machine Learning Intro Calculation

Understanding Micro Macro And Weighted Averages For Scikit Learn

Understanding The Accuracy Score Metric's Limitations In The Data
