Keras Learning Rate Decay Adam

Change the version of Keras API in TensorFlow: try `from tensorflow.python import keras`. With this you can switch Keras-dependent code to TensorFlow with a one-line change. You can also try importing from `tensorflow.contrib`.

Keras Learning Rate Decay Adam


https://i.ytimg.com/vi/drcagR2zNpw/maxresdefault.jpg

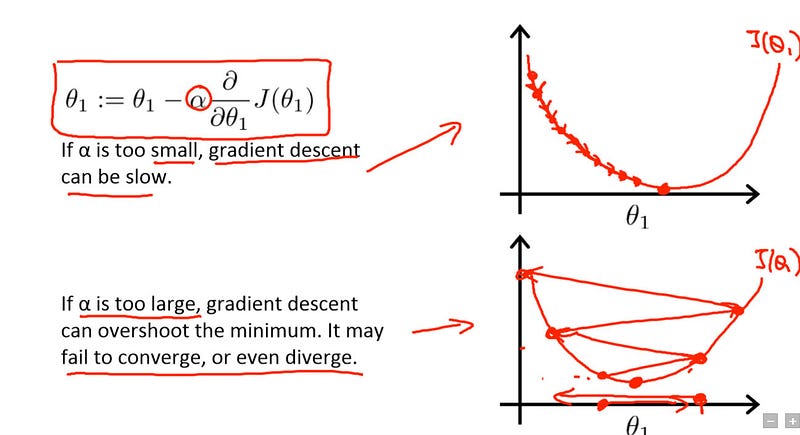

Who's Adam And What's He Optimizing? Deep Dive Into Optimizers For

https://i.ytimg.com/vi/MD2fYip6QsQ/maxresdefault.jpg

AdaMax

https://ml-explained.com/articles/adamax-explained/adamax_example.PNG
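AdaMax is the variant of Adam that replaces the second-moment estimate with an exponentially weighted infinity norm; it is described in the same paper as Adam (Kingma & Ba). The following is a minimal scalar sketch of one update step, not any library's implementation. The function name `adamax_step`, the small `eps` guard in the denominator, and the defaults (lr=0.002, beta1=0.9, beta2=0.999, the settings suggested in the paper) are assumptions of this sketch.

```python
def adamax_step(theta, grad, m, u, t, lr=0.002, beta1=0.9, beta2=0.999, eps=1e-7):
    """One AdaMax update for a scalar parameter (hypothetical helper).

    t is the 1-based step count; m and u should start at 0.0.
    Returns the updated (theta, m, u).
    """
    m = beta1 * m + (1.0 - beta1) * grad       # first moment: EMA of gradients
    u = max(beta2 * u, abs(grad))              # exponentially weighted infinity norm
    step_size = lr / (1.0 - beta1 ** t)        # bias correction applies to m only
    theta = theta - step_size * m / (u + eps)  # eps guards against u == 0 (assumption)
    return theta, m, u


# Usage: minimise f(x) = x**2, whose gradient is 2x.
x, m, u = 5.0, 0.0, 0.0
for t in range(1, 501):
    x, m, u = adamax_step(x, 2.0 * x, m, u, t, lr=0.1)
print(x)
```

On the very first step the bias-corrected update has magnitude close to `lr` regardless of the gradient's scale, which is one reason AdaMax (like Adam) is relatively insensitive to gradient magnitude.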

I am writing code for building extraction using deep learning, but when I try to import the library files it shows the error `No module named 'tensorflow.keras'`.

I'm running a Keras model with a submission deadline of 36 hours. If I train my model on the CPU, it will take approximately 50 hours. Is there a way to run Keras on a GPU? I'm using

According to the TF 2.6 release log, Keras now lives in a separate `keras` package that backs `tensorflow.keras`, and TensorFlow's internal copy of Keras is slated for removal; so use `from tensorflow import keras` rather than importing TensorFlow's internal Keras modules.

I'm trying to change the learning rate of my model after it has been trained with a different learning rate. I read here, here, here and in some other places, but I can't even find

More pictures related to Keras Learning Rate Decay Adam

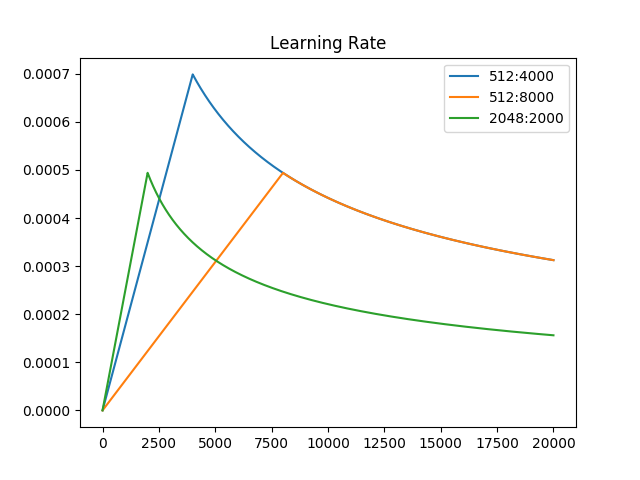

Adam learning rate Decay

https://pic1.zhimg.com/v2-9cda69a36bd0e20c2d0a77288a8fe153_720w.gif?source=172ae18b
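Older Keras releases let optimizers such as `Adam` take a `decay` argument that shrank the learning rate every iteration as lr / (1 + decay * iterations), where `iterations` counts completed optimizer steps. The pure-Python sketch below reproduces that time-based formula and feeds it into a scalar Adam update so the interaction can be inspected without TensorFlow. The function names, the scalar setting, and `eps=1e-7` (the `tf.keras` Adam default) are choices of this sketch, not the Keras source.

```python
import math


def legacy_decayed_lr(initial_lr: float, decay: float, iterations: int) -> float:
    """Time-based decay used by the `decay` argument of older Keras optimizers."""
    return initial_lr / (1.0 + decay * iterations)


def adam_step(theta, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1.0 - beta1) * grad          # EMA of gradients
    v = beta2 * v + (1.0 - beta2) * grad * grad   # EMA of squared gradients
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected second moment
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Usage: minimise f(x) = x**2 (gradient 2x) with lr=0.1 and decay=1e-3.
x, m, v = 3.0, 0.0, 0.0
for step in range(1, 1001):
    lr = legacy_decayed_lr(0.1, 1e-3, step - 1)  # iterations before this update
    x, m, v = adam_step(x, 2.0 * x, m, v, step, lr)
print(x)
```

With decay=1e-3 the rate has halved after 1000 iterations; in current Keras the equivalent effect is usually obtained by passing a learning-rate schedule object as `learning_rate` instead.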

Cosine Annealing Explained | Papers With Code

https://production-media.paperswithcode.com/methods/Screen_Shot_2020-05-30_at_5.46.29_PM.png
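Cosine annealing, as used in SGDR (Loshchilov & Hutter), lowers the learning rate from a maximum to a minimum along half a cosine wave: lr(t) = lr_min + 0.5 * (lr_max - lr_min) * (1 + cos(pi * t / T)). A minimal pure-Python sketch follows; the function name and the choice to hold the rate at `lr_min` once `step` passes `total_steps` are assumptions (SGDR's warm restarts would instead reset `step` to 0).

```python
import math


def cosine_annealing_lr(step: int, total_steps: int, lr_max: float, lr_min: float = 0.0) -> float:
    """Cosine-annealed learning rate at a given step, without restarts."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    step = min(max(step, 0), total_steps)  # clamp: hold lr_min after the schedule ends
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / total_steps))  # falls from 1 to 0
    return lr_min + (lr_max - lr_min) * cosine


for s in (0, 250, 500, 750, 1000):
    print(s, cosine_annealing_lr(s, 1000, lr_max=1e-3, lr_min=1e-5))
```

Setting `lr_min = alpha * lr_max` gives the same curve as the formula documented for Keras' `CosineDecay` schedule without warm-up.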

Keras Adam

https://i.stack.imgur.com/0oNft.png

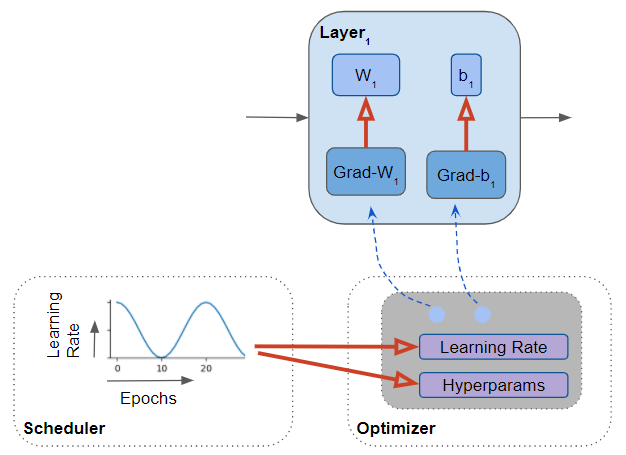

The difference between `tf.keras` and `keras` is the TensorFlow-specific enhancement to the framework. `keras` is an API specification that describes how a deep learning framework


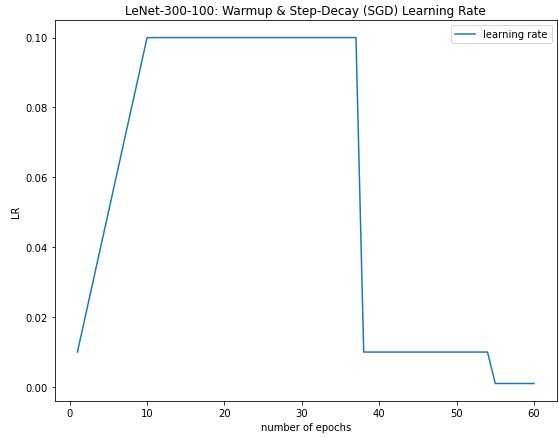

How to Produce a Learning Rate Schedule In

https://i.stack.imgur.com/luZjz.png

Intro To Deep Learning

https://cdn-images-1.medium.com/max/800/1*EP8stDFdu_OxZFGimCZRtQ.jpeg


Keras: plot training, validation and test set accuracy


Change the version of Keras API in TensorFlow

Learning Rate Scheduler PyTorch Forums


Learning Rate Cosine Annealing Decay

Learning Rate Exponential Decay

Cosine Learning Rate PyTorch

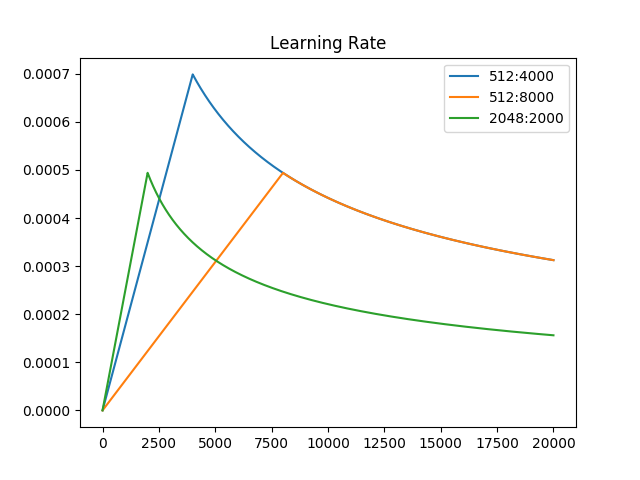
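The exponential-decay curve listed above follows lr = initial_lr * decay_rate ** (step / decay_steps), which is the formula documented for Keras' `ExponentialDecay` schedule; with `staircase=True` the exponent is floored, so the rate drops in discrete jumps every `decay_steps`. A pure-Python sketch (the function name is hypothetical):

```python
import math


def exponential_decay_lr(step, initial_lr, decay_steps, decay_rate, staircase=False):
    """lr = initial_lr * decay_rate ** (step / decay_steps)."""
    if decay_steps <= 0:
        raise ValueError("decay_steps must be positive")
    exponent = step / decay_steps
    if staircase:
        exponent = math.floor(exponent)  # decay in discrete jumps every decay_steps
    return initial_lr * decay_rate ** exponent


for s in (0, 500, 1000, 1500, 2000):
    smooth = exponential_decay_lr(s, 0.1, 1000, 0.96)
    stepped = exponential_decay_lr(s, 0.1, 1000, 0.96, staircase=True)
    print(s, smooth, stepped)
```

In Keras itself one would typically pass `keras.optimizers.schedules.ExponentialDecay(initial_learning_rate, decay_steps, decay_rate, staircase=...)` as the `learning_rate` of `keras.optimizers.Adam`, which evaluates the schedule at each optimizer step.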

Adam Optimizer With Warm-up And Cosine Decay


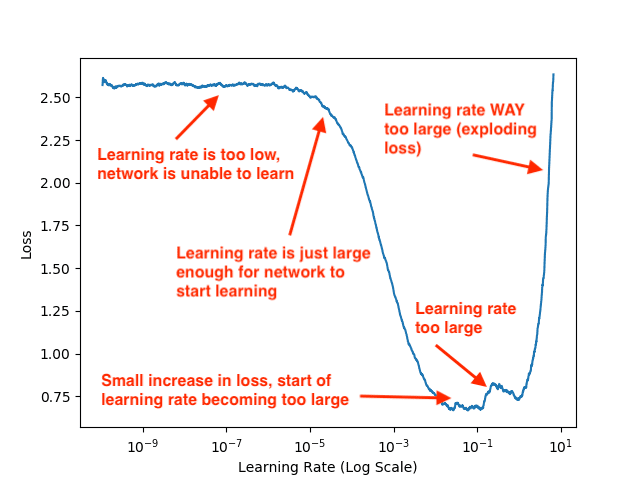
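One common way to pair Adam with warm-up and cosine decay is to ramp the learning rate linearly from 0 to a peak over the first `warmup_steps`, then follow a cosine curve down to a floor by `total_steps`. The sketch below assumes that linear warm-up variant (other warm-up shapes exist), and `warmup_cosine_lr` and its parameters are hypothetical names. Recent Keras releases also expose `warmup_target` and `warmup_steps` arguments on `CosineDecay`; check the version you use.

```python
import math


def warmup_cosine_lr(step, peak_lr, warmup_steps, total_steps, min_lr=0.0):
    """Linear warm-up to peak_lr, then cosine decay to min_lr at total_steps."""
    if not 0 <= warmup_steps < total_steps:
        raise ValueError("need 0 <= warmup_steps < total_steps")
    step = max(step, 0)
    if step < warmup_steps:
        return peak_lr * step / warmup_steps  # linear ramp: 0 -> peak_lr
    decay_steps = total_steps - warmup_steps
    progress = min((step - warmup_steps) / decay_steps, 1.0)  # 0 -> 1, then held
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


schedule = [warmup_cosine_lr(s, 1e-3, 100, 1000) for s in range(1001)]
print(max(schedule), schedule.index(max(schedule)))
```

The ramp and the cosine meet at `peak_lr` when `step == warmup_steps`, so the schedule is continuous; with `warmup_steps=0` it reduces to plain cosine decay.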

Differential And Adaptive Learning Rates Neural Network Optimizers

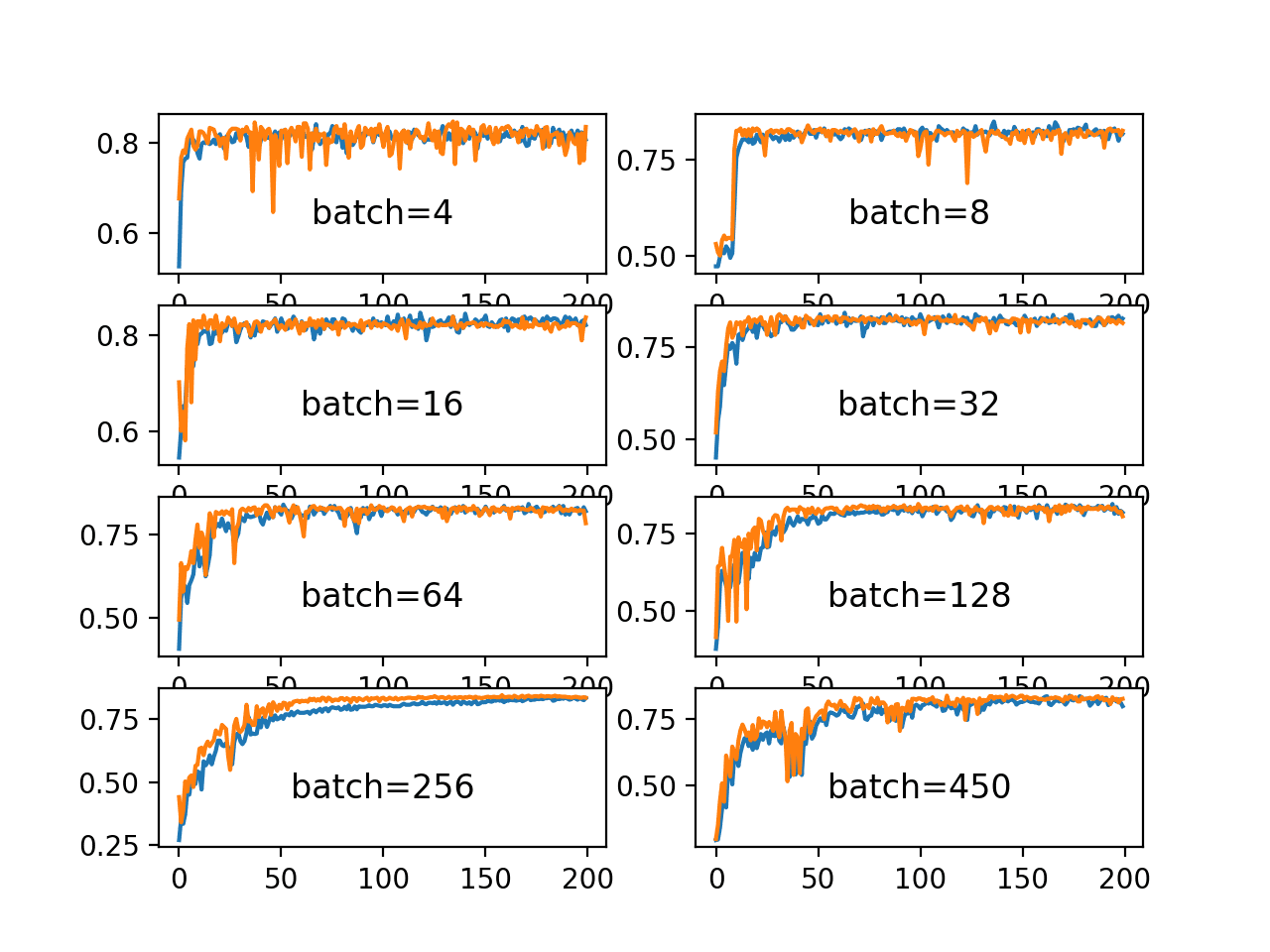

Keras Batch Size And Learning Rate

