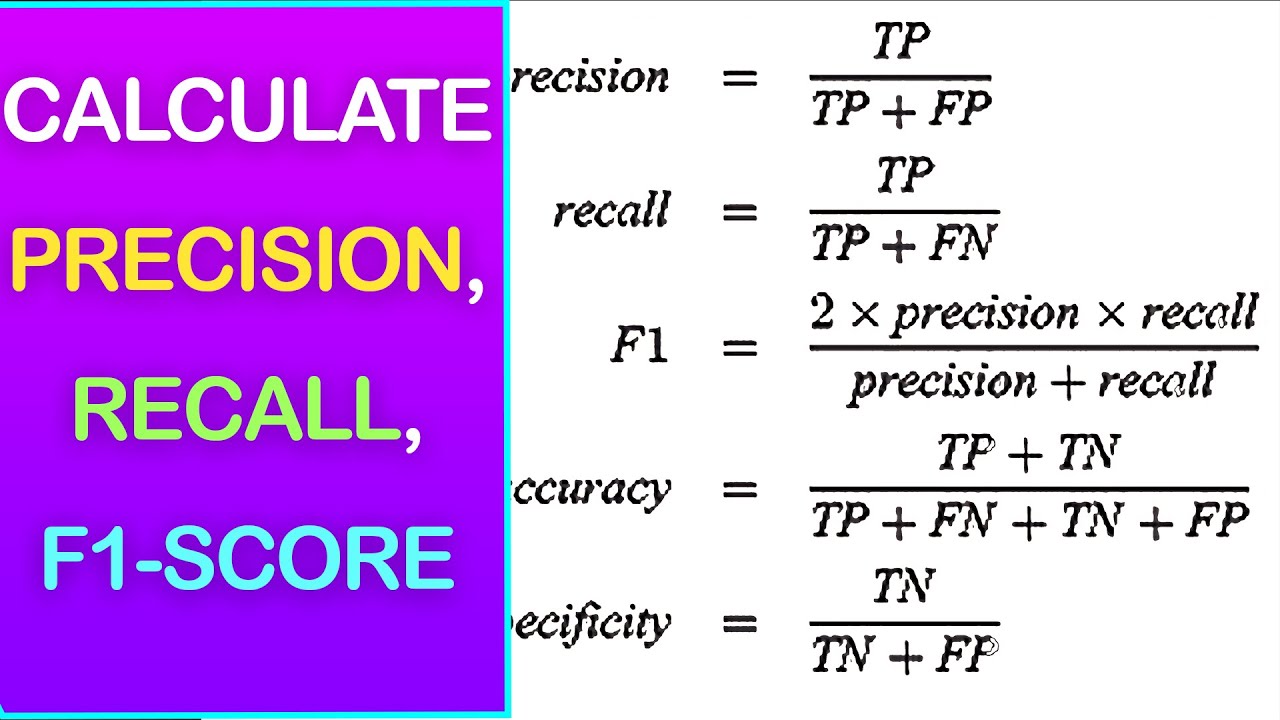

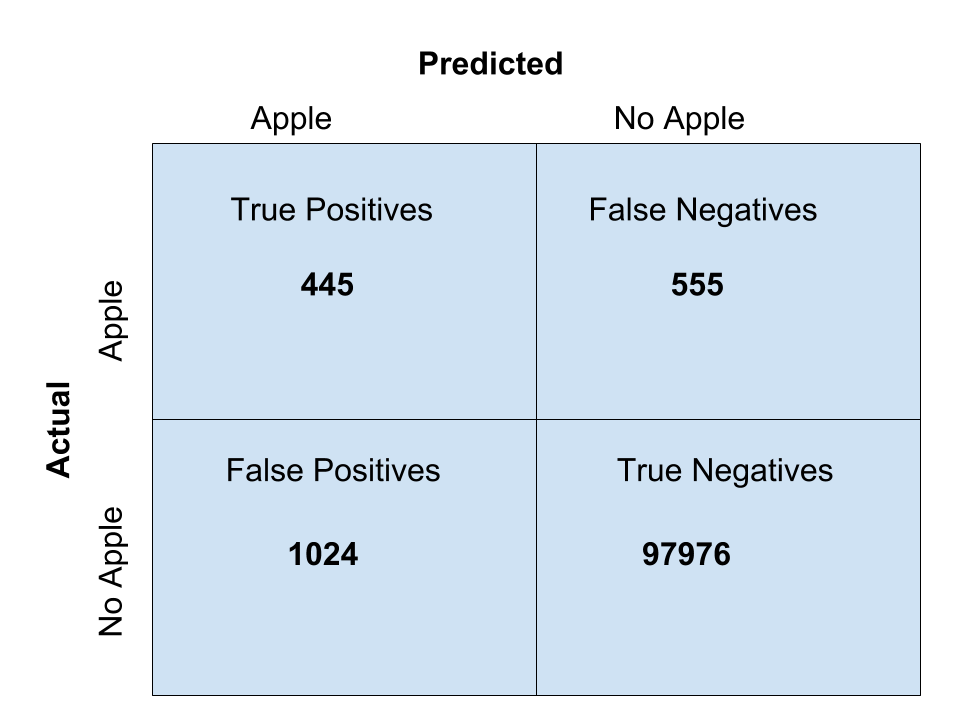

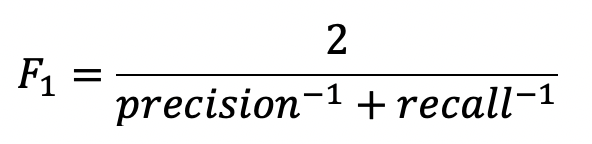

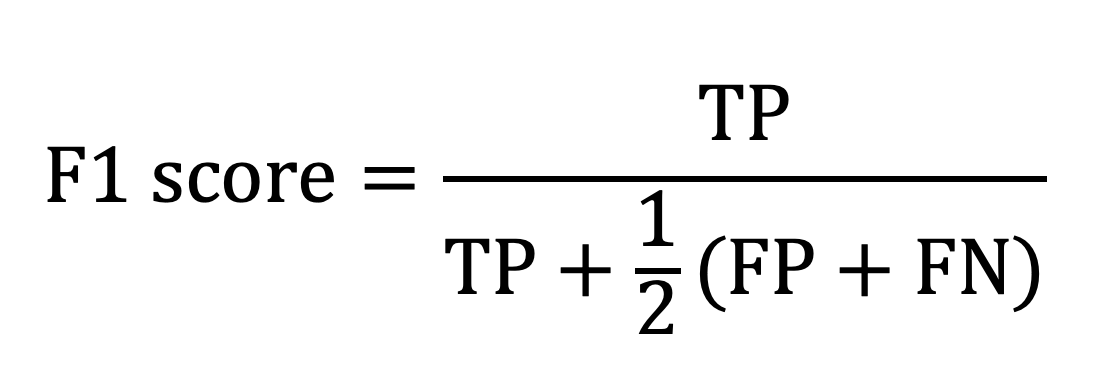

What Is F1 Score

The F1 score is the harmonic mean of precision and recall, so it takes both false positives and false negatives into account. Intuitively it is not as easy to understand as accuracy, but F1 is usually more useful than accuracy, especially if you have an uneven class distribution.
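As a rough illustration of why F1 can be more informative than accuracy on uneven classes, the sketch below computes both from confusion-matrix counts. The counts are made up for illustration, and the helper names `accuracy` and `f1_from_counts` are hypothetical, not part of any library.

```python
def accuracy(tp, fp, fn, tn):
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + fp + fn + tn)


def f1_from_counts(tp, fp, fn):
    """F1 as the harmonic mean of precision and recall.

    Returns 0.0 when there are no true positives, a convention chosen
    here to avoid dividing by zero.
    """
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical imbalanced data: 990 negatives and 10 positives.
# The classifier finds only 2 of the 10 positives and raises 3 false alarms.
tp, fp, fn, tn = 2, 3, 8, 987

acc = accuracy(tp, fp, fn, tn)
f1 = f1_from_counts(tp, fp, fn)
print(f"accuracy = {acc:.3f}")
print(f"F1       = {f1:.3f}")
```

With these counts the accuracy is dominated by the many true negatives, while F1 reflects how poorly the rare positive class is handled.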

As you can see from the plots, consider the X axis and Y axis as precision and recall and the Z axis as the score. One plot is for the arithmetic mean and the other is for the harmonic mean. With the harmonic mean, precision and recall must both contribute evenly for the F1 score to rise; with the arithmetic mean, a high value of one can compensate for a low value of the other.

A high AUC ROC with a low F1 (or other point metric) means that your classifier currently does a bad job, but you can find a threshold at which its score is actually pretty decent. A low AUC ROC with a low F1 (or other point metric) means that your classifier currently does a bad job and that even fitting a threshold will not change it.
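The difference between the two means can be checked numerically. This is a minimal sketch with hypothetical (precision, recall) pairs; the function names are illustrative only.

```python
def arithmetic_mean(p, r):
    """Plain average of precision and recall."""
    return (p + r) / 2


def harmonic_mean(p, r):
    """Harmonic mean of precision and recall (the F1 formula).

    Defined as 0.0 when either input is 0, which avoids dividing by zero.
    """
    if p == 0 or r == 0:
        return 0.0
    return 2 * p * r / (p + r)


# Hypothetical (precision, recall) pairs, from balanced to lopsided.
pairs = [(0.5, 0.5), (0.9, 0.1), (1.0, 0.0)]
for p, r in pairs:
    print(
        f"p={p:.1f} r={r:.1f}  "
        f"arithmetic={arithmetic_mean(p, r):.2f}  "
        f"harmonic={harmonic_mean(p, r):.2f}"
    )
```

All three pairs have the same arithmetic mean, but the harmonic mean drops sharply as the pair becomes lopsided.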

What Is F1 Score

https://i.ytimg.com/vi/9w4HJ0VUy2g/maxresdefault.jpg

Micro Macro Weighted Averages Of F1 Score Clearly Explained By

https://miro.medium.com/max/1400/1*4dlBaTCECU55ezUZoyXq5A.png

How To Calculate The F1 Score In Machine Learning Shiksha Online

https://images.shiksha.com/mediadata/ugcDocuments/images/wordpressImages/2022_12_image-29.jpg

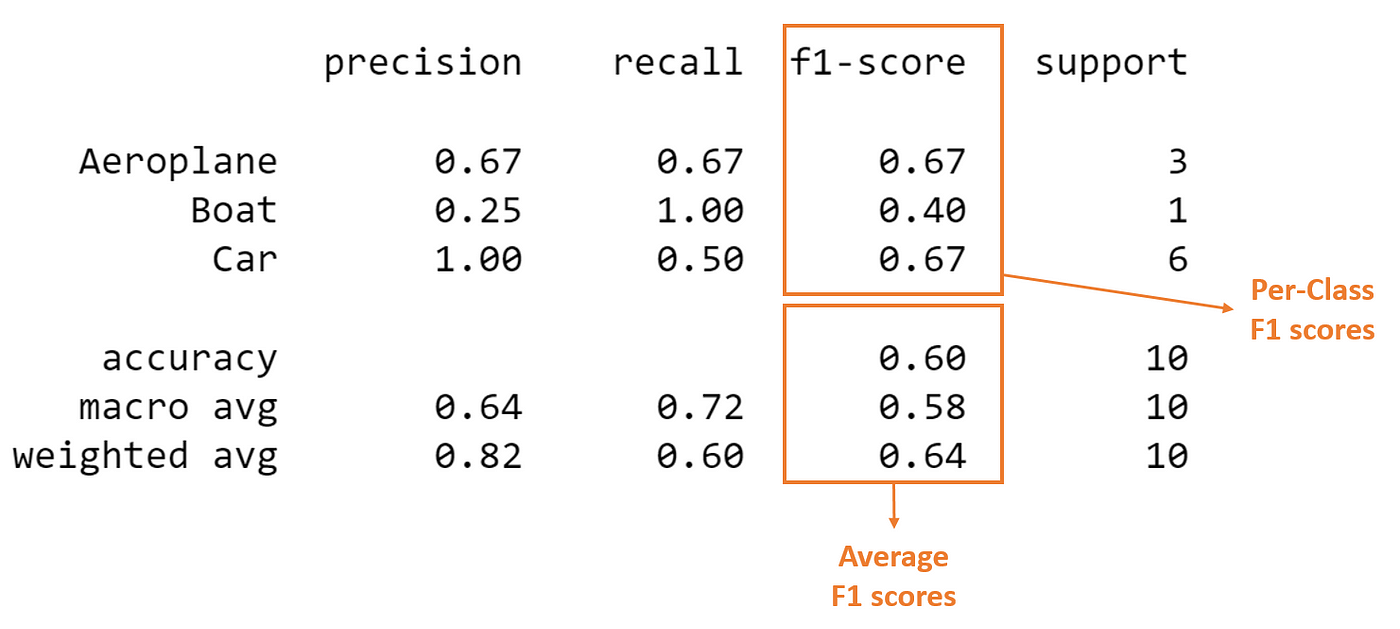

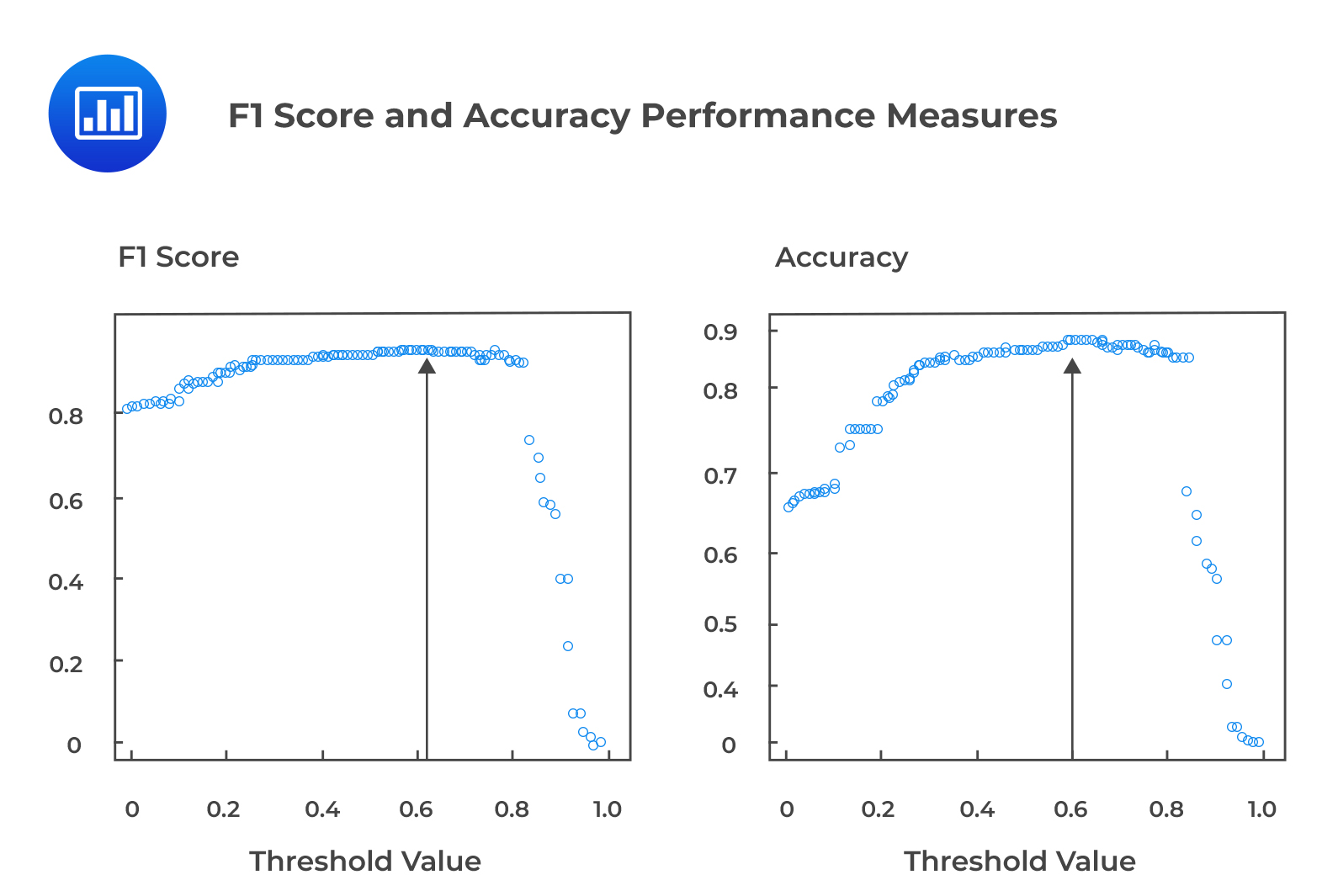

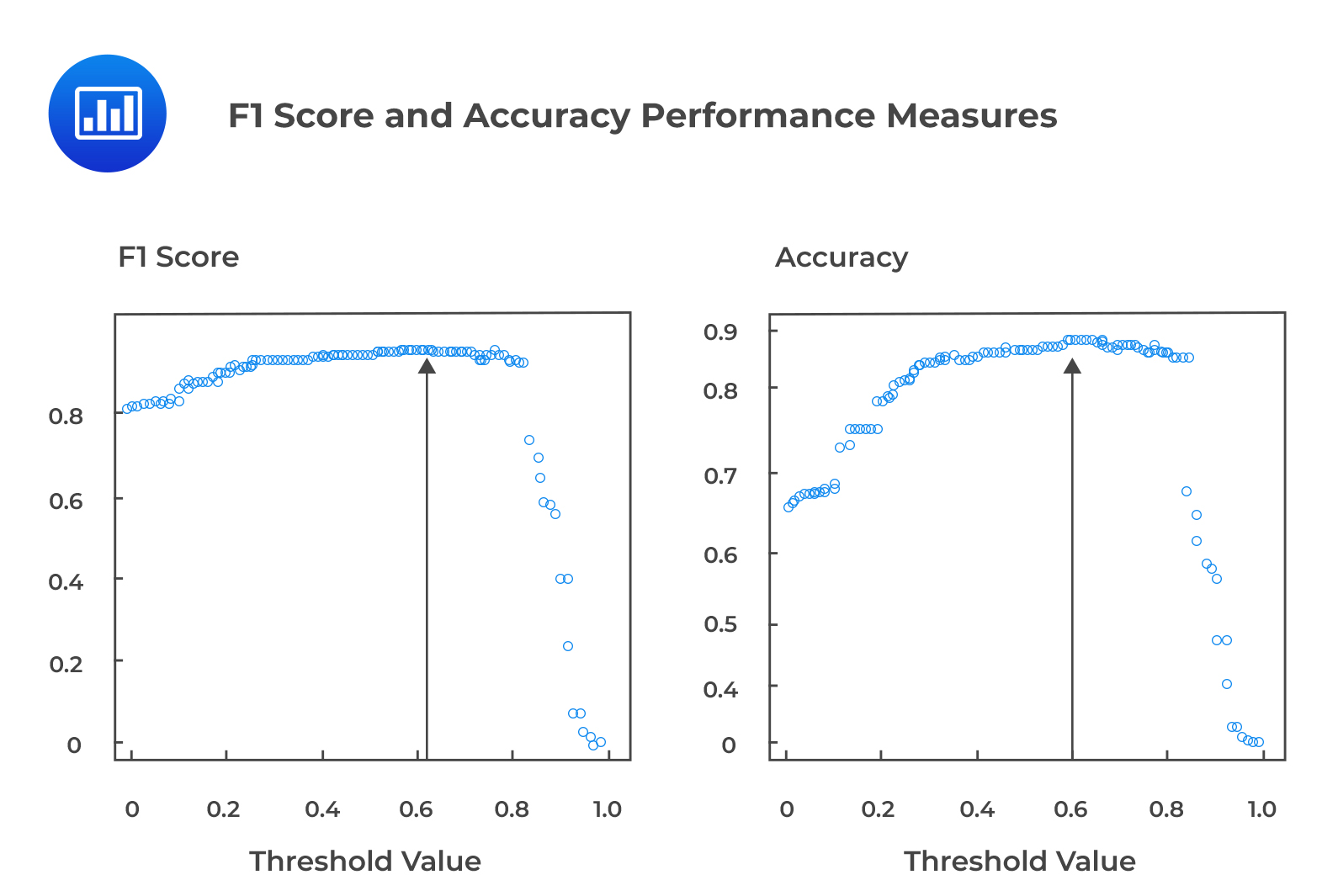

There are three common ways to average the F1 score across classes. Take the average of the F1 score for each class: that is the avg / total result above, and it is also called macro averaging. Compute the F1 score using the global counts of true positives, false positives, and false negatives, summing each count over all classes: this is micro averaging. Compute an average of the per-class F1 scores weighted by each class's support (its number of true instances): this is weighted averaging.

AUROC vs F1 Score: Conclusion. In general, the ROC curve covers many different threshold levels, and thus it corresponds to many F-score values. The F1 score applies to one particular point on the ROC curve. You may think of it as a measure of precision and recall at a particular threshold value, whereas AUC is the area under the whole ROC curve.
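To make the "one F1 per threshold" point concrete, the sketch below sweeps a few thresholds over classifier scores and computes F1 at each. The labels, scores, and candidate thresholds are made up, and `f1_at_threshold` is a hypothetical helper, not a library function.

```python
def f1_at_threshold(y_true, scores, threshold):
    """F1 for the positive class when predicting 1 if score >= threshold."""
    tp = fp = fn = 0
    for truth, score in zip(y_true, scores):
        pred = 1 if score >= threshold else 0
        if pred == 1 and truth == 1:
            tp += 1
        elif pred == 1 and truth == 0:
            fp += 1
        elif pred == 0 and truth == 1:
            fn += 1
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical labels and classifier scores.
y_true = [0, 0, 0, 0, 1, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.60, 0.80]

# Each threshold is one operating point, and each has its own F1.
results = {t: f1_at_threshold(y_true, scores, t) for t in (0.1, 0.3, 0.5, 0.7)}
best_threshold = max(results, key=results.get)

for t, value in results.items():
    print(f"threshold={t:.1f}  F1={value:.2f}")
print("best threshold:", best_threshold)
```

The same set of scores yields a different F1 at every threshold, which is why a single reported F1 only describes one operating point.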

If we compute the F1 score using sklearn's f1_score metric by setting b 1 0 0 1000, we obtain 0.95. Now I am a little bit confused, because all the metrics (accuracy, AUC, and F1 score) are showing high values, which means that the model is really good at the prediction task, which is ...

If you have imbalanced data and you value all classes equally, you MUST use macro F1. When scikit-learn writes "This does not take label imbalance into account", it means that macro F1 values the classes equally, i.e. it won't give the more frequent class more weight. That is true because macro F1 is a simple average of the per-class F1 scores.
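The effect of the averaging choice on imbalanced data can be sketched in plain Python. The labels below are hypothetical, and the helpers `per_class_counts`, `f1`, and `averaged_f1` are illustrative names rather than library functions; the weighted variant assumes weighting by support, as described for scikit-learn's `average='weighted'`.

```python
from collections import Counter


def per_class_counts(y_true, y_pred):
    """Return {label: (tp, fp, fn)}, treating each label one-vs-rest."""
    labels = sorted(set(y_true) | set(y_pred))
    counts = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        counts[label] = (tp, fp, fn)
    return counts


def f1(tp, fp, fn):
    """F1 from counts; 0.0 when the score would otherwise be undefined."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def averaged_f1(y_true, y_pred):
    counts = per_class_counts(y_true, y_pred)
    support = Counter(y_true)
    per_class = {label: f1(*c) for label, c in counts.items()}

    # Macro: simple mean, so every class counts equally.
    macro = sum(per_class.values()) / len(per_class)
    # Weighted: mean weighted by the number of true instances per class.
    weighted = sum(per_class[label] * support[label] for label in per_class) / len(y_true)
    # Micro: pool the counts over all classes, then compute one score.
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    micro = f1(tp, fp, fn)
    return {"macro": macro, "micro": micro, "weighted": weighted}


# Hypothetical imbalanced labels: class 0 is frequent, class 1 is rare.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
result = averaged_f1(y_true, y_pred)
for name, value in result.items():
    print(f"{name:>8}: {value:.3f}")
```

On this toy data the macro score is the lowest of the three, because the weaker result on the rare class counts as much as the strong result on the frequent class.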

More pictures related to What Is F1 Score

F Is For F1 Score Guide To AI Jaid

https://jaid.io/wp-content/uploads/2023/08/Jaid_A-I-Z_F-is-for-F1-score_1920x1080px_v01.png

What Is F1 Score F1 Score Formula Machine Learning Tutorials

https://i.ytimg.com/vi/nkrH8iDfWdI/maxresdefault.jpg

F1 Score Formula In Confusion Matrix Nedi Tanhya

http://3.bp.blogspot.com/-i2PPzW-r-BA/V8a7Q0Fz6rI/AAAAAAAABIc/AgvKGoTFPGgRYl1bb1QMTjdRyOLRUBfqwCK4B/s1600/F1%2BScore.png

In this case you can use sklearn's `f1_score`, but you can use your own if you prefer: `from sklearn.metrics import f1_score, make_scorer`, then `f1 = make_scorer(f1_score, average='macro')`. Once you have made your scorer, you can plug it directly into the grid creation as the `scoring` parameter.

The data suggests we have not missed any true positives and have not predicted any false negatives (recall_score equals 1). However, we have predicted one false positive in the second observation, which leads to a precision_score of 0.93. As both precision_score and recall_score are non-zero, the f1_score with the weighted parameter exists.
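Returning to the custom scorer, the following is a minimal sketch of plugging it into a grid search. The synthetic dataset, the choice of `LogisticRegression`, and the parameter grid are assumptions made for illustration; the source does not say which estimator or data were used.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score, make_scorer
from sklearn.model_selection import GridSearchCV

# Synthetic, imbalanced toy data (an assumption for illustration only).
X, y = make_classification(
    n_samples=300, n_features=8, weights=[0.8, 0.2], random_state=0
)

# Scorer that evaluates each candidate by macro-averaged F1.
f1 = make_scorer(f1_score, average="macro")

grid = GridSearchCV(
    estimator=LogisticRegression(max_iter=1000),
    param_grid={"C": [0.1, 1.0, 10.0]},  # hypothetical search space
    scoring=f1,
    cv=3,
)
grid.fit(X, y)

print(grid.best_params_, round(grid.best_score_, 3))
```

scikit-learn also accepts the string `scoring="f1_macro"` for the same metric; building the scorer with `make_scorer` is mainly useful when you want to swap in your own scoring function.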

F1 Score In Machine Learning Intro Calculation

https://cdn.prod.website-files.com/5d7b77b063a9066d83e1209c/639c3d0f93545970016d3dc7_f-beta-eqn.webp

What Is F1 Score In Machine Learning C3 AI Glossary Definition

https://c3.ai/wp-content/uploads/2020/10/Screen-Shot-2020-10-15-at-4.35.10-PM.png

Sensitivity Precision And F1 Score Calculations Sensitivity

F1 Score In Machine Learning Intro Calculation

Choosing Performance Metrics Accuracy Recall Precision F1 Score

Graph For Accuracy Precision Recall And F1 Score For Different

Sensibilidad Y Especificidad Contenido Y Aplicación Al Estudio De Cribado

F1 Score 2024 Ailey Arlinda

What Is F1 score In Machine Learning Precision Recall And F1 Score

Accuracy Confusion Matrix Precision Recall F1 ROC AUC

What Is F1 Score AIML
