F1 Score Meaning

The question is about the meaning of the `average` parameter in `sklearn.metrics.f1_score`. Setting `average='micro'` tells the function to compute F1 from the total true positives, false negatives, and false positives, pooled over the whole dataset, regardless of which label each prediction belongs to.

Returns: `f1_score`, a float or an array of floats of shape `[n_unique_labels]`. This is the F1 score of the positive class in binary classification, or the weighted average of the F1 scores of each class for the multiclass task. When an array is returned, each value is the F1 score for that particular class, so each class can be predicted with a different score.

Regarding what the best score is: F1 ranges from 0 (worst) to 1 (best). The closest intuitive reading of the F1 score is as a mean of recall and precision (specifically, their harmonic mean). In a classification task you may be planning to build a classifier with high precision AND recall. For example, a classifier that tells if …


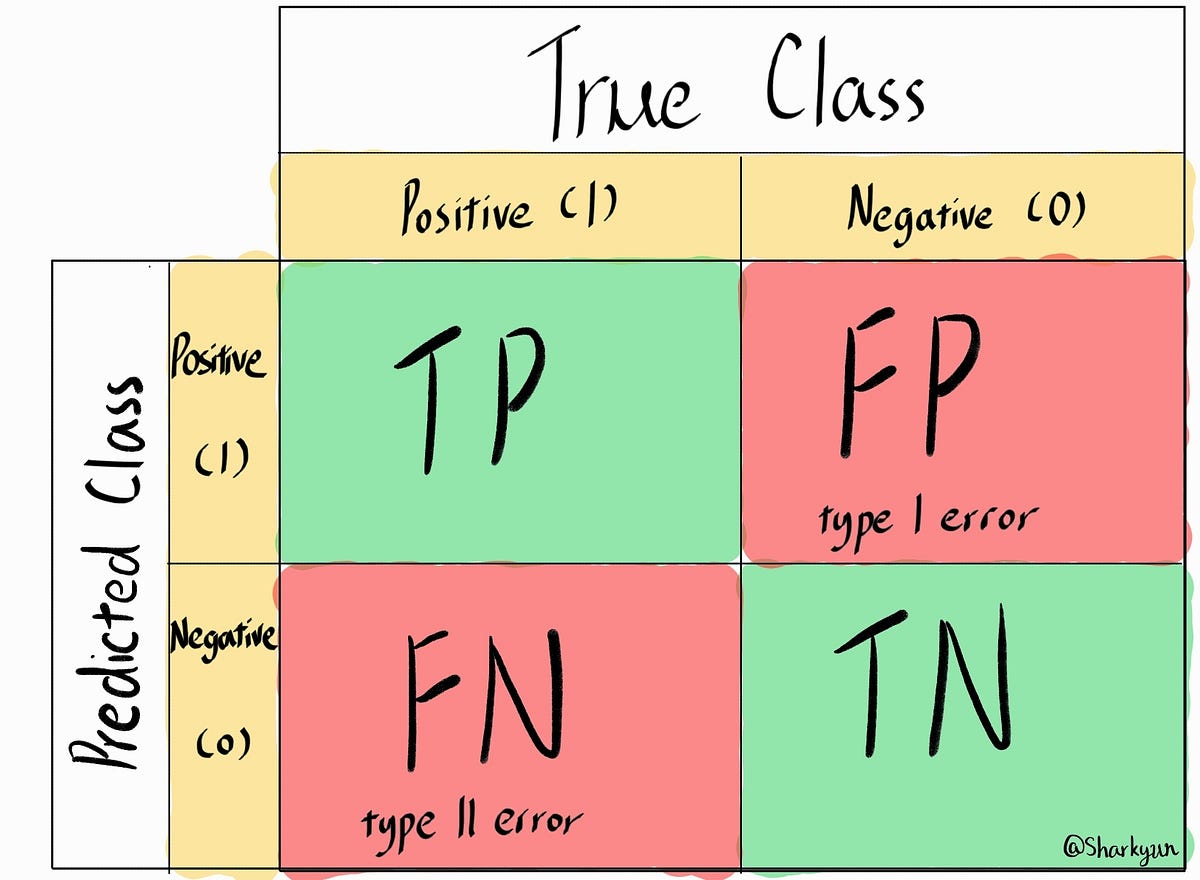

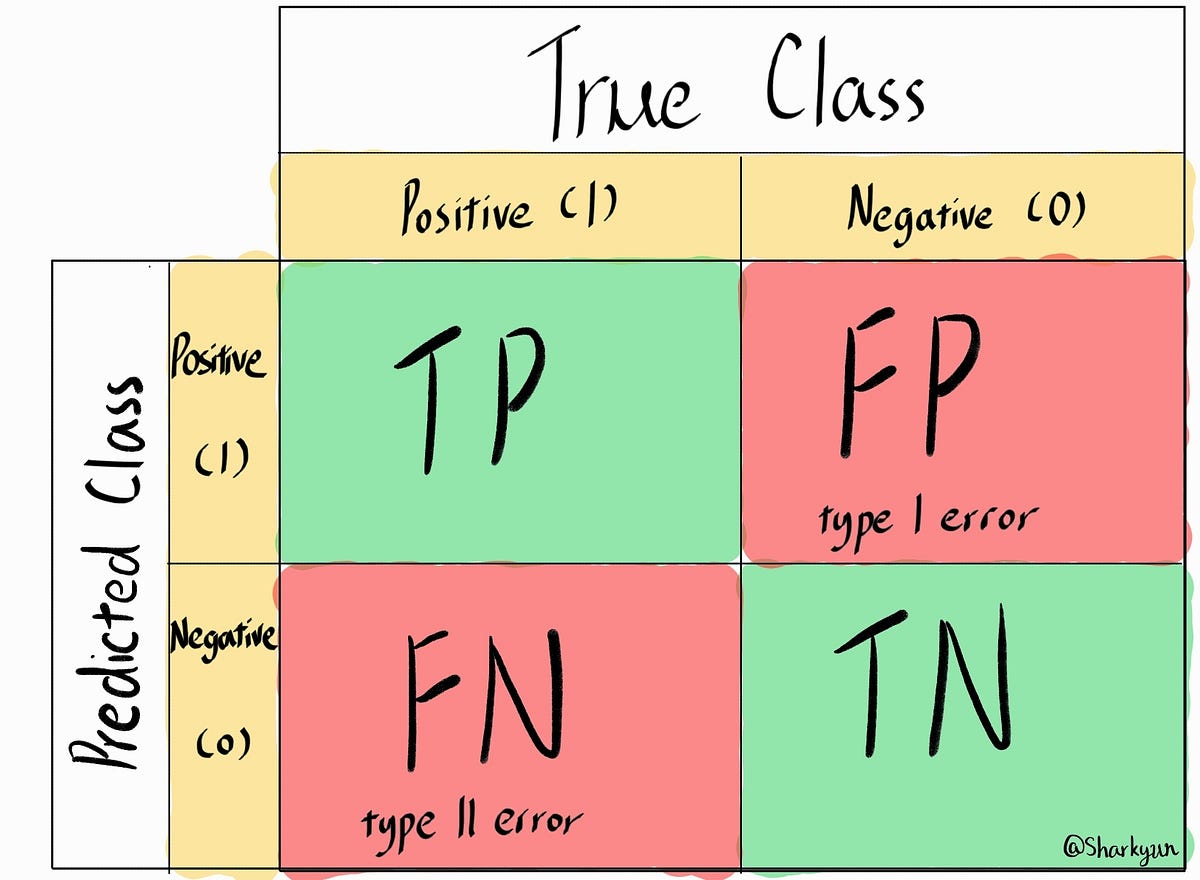

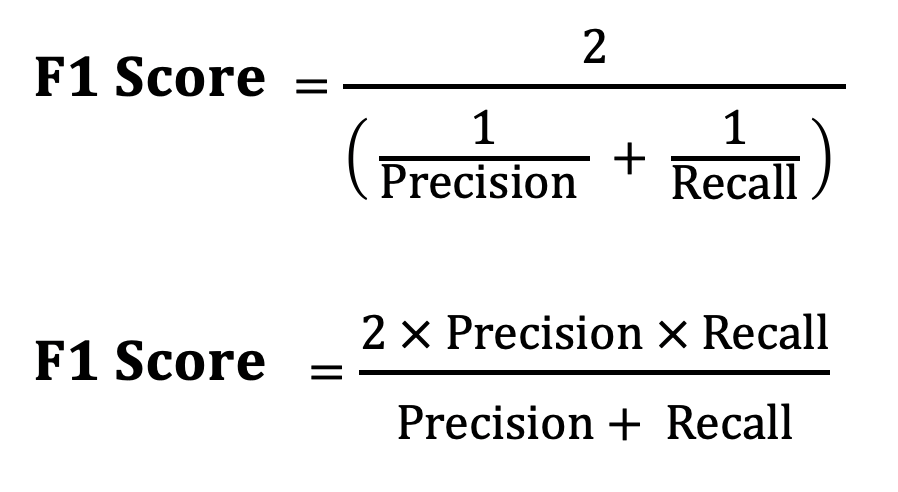

As you can see from the plots, take the X axis and Y axis as precision and recall, and the Z axis as the F1 score. One plot shows the arithmetic mean and the other shows the harmonic mean. In the harmonic-mean plot, precision and recall must both contribute evenly for the F1 score to rise, unlike with the arithmetic mean.

The F1 score is the harmonic mean of precision and recall, so you need to compute precision and recall in order to compute it. Both measures are computed in reference to true positives (positive instances assigned a positive label), false positives (negative instances assigned a positive label), etc.
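To make the difference between the two means concrete, here is a minimal sketch in plain Python. The function names (`f1_from_counts`, `arithmetic_mean`, `harmonic_mean`) and the sample numbers are illustrative assumptions, not part of any library. Returning 0.0 when a denominator is zero is also an assumed convention.

```python
def arithmetic_mean(p, r):
    """Plain average of precision and recall."""
    return (p + r) / 2


def harmonic_mean(p, r):
    """Harmonic mean of precision and recall (this is the F1 score)."""
    # Assumed convention: return 0.0 when both inputs are zero.
    return 2 * p * r / (p + r) if (p + r) else 0.0


def f1_from_counts(tp, fp, fn):
    """F1 from true positives, false positives, and false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return harmonic_mean(precision, recall)


# A lopsided classifier: high precision, poor recall (made-up values).
p, r = 0.9, 0.1
print(f"arithmetic: {arithmetic_mean(p, r):.2f}")  # 0.50
print(f"harmonic:   {harmonic_mean(p, r):.2f}")    # 0.18

# A balanced classifier: 8 true positives, 2 false positives, 2 false negatives.
print(f"F1: {f1_from_counts(8, 2, 2):.2f}")        # 0.80
```

The arithmetic mean rewards the lopsided classifier with 0.50, while the harmonic mean stays close to the weaker of the two values. This is why both precision and recall have to be high for F1 to be high.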

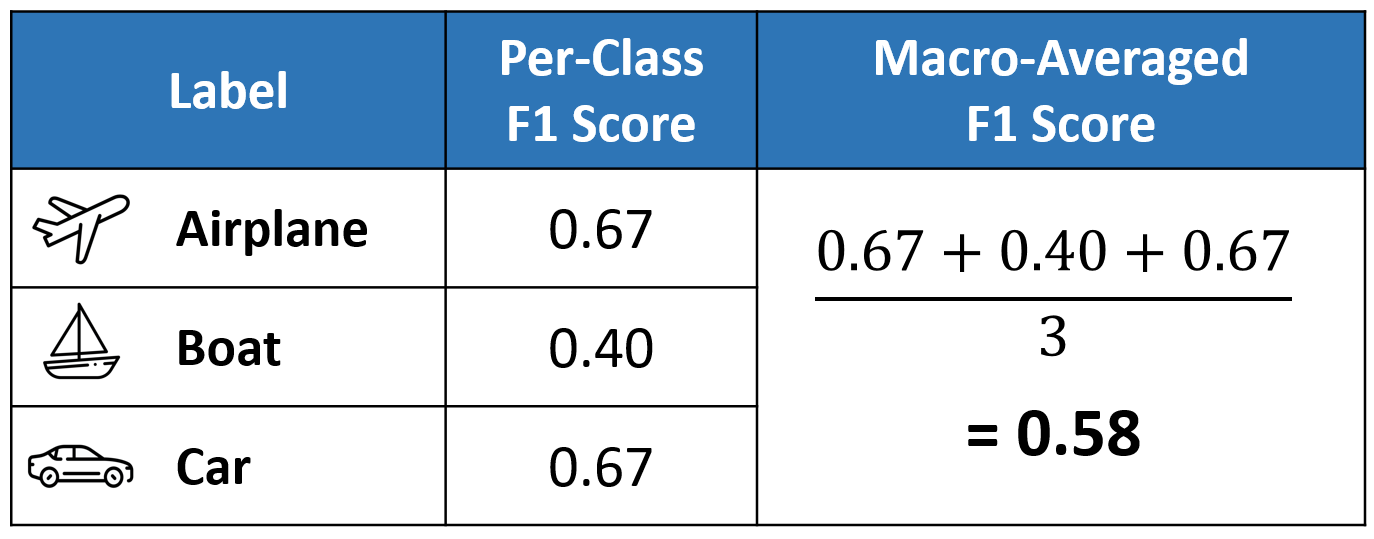

There are several ways to combine per-class scores into one number. Take the average of the F1 score for each class: that's the "avg / total" result above. It's also called macro averaging. Compute the F1 score using the global counts of true positives, false negatives, etc. (you sum the number of true positives and false negatives for each class), also known as micro averaging. Or compute a weighted average of the F1 scores.

In such a situation, the F1 score could be a better metric. The F1 score is also a common choice for information retrieval problems and is popular in industry settings. Here is a well-explained example: Building ML models is hard. Deploying them in …
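The three averaging schemes described above can be sketched in plain Python as follows. The per-class counts are made up for illustration, and the helper `f1` is a hypothetical name, not a library function. The weighted average here weights each class by its support (true positives plus false negatives), which is an assumption about what "weighted" means in this context.

```python
def f1(tp, fp, fn):
    """F1 from counts; algebraically equal to the harmonic mean of precision and recall."""
    denom = 2 * tp + fp + fn
    # Assumed convention: return 0.0 when there is nothing to score.
    return 2 * tp / denom if denom else 0.0


# Hypothetical per-class counts: class -> (tp, fp, fn).
counts = {"a": (8, 2, 2), "b": (1, 3, 4), "c": (5, 1, 0)}

# Per-class F1 scores.
per_class = {label: f1(*c) for label, c in counts.items()}

# Macro: unweighted mean of the per-class F1 scores.
macro = sum(per_class.values()) / len(per_class)

# Micro: pool the counts over all classes, then compute a single F1.
total_tp = sum(c[0] for c in counts.values())
total_fp = sum(c[1] for c in counts.values())
total_fn = sum(c[2] for c in counts.values())
micro = f1(total_tp, total_fp, total_fn)

# Weighted: mean of per-class F1, weighted by support (tp + fn).
support = {label: c[0] + c[2] for label, c in counts.items()}
weighted = sum(per_class[l] * support[l] for l in counts) / sum(support.values())

print(f"macro={macro:.3f} micro={micro:.3f} weighted={weighted:.3f}")
```

With these counts the rare, poorly predicted class "b" pulls the macro average down more than the micro average, because macro averaging gives every class the same weight regardless of its size.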


The F1 score gives you the harmonic mean of precision and recall. The scores corresponding to each class tell you how accurately the classifier classifies the data points of that particular class compared to all other classes. The support is the number of samples of the true response that lie in that class.

I know the F1 score, which uses precision and recall. But what does "mean" refer to in "mean F1 score"? When do we use it, and how do we calculate the mean?

EDIT, to explain my problem explicitly: I know the F1 score is the harmonic mean of precision and recall. And when we calculate the F1 score, multiple classification results are needed to calculate precision and recall.
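As a rough illustration of per-class scores and support, the sketch below builds a small report from two label lists. The function `per_class_report` and the example labels are hypothetical. Reading "mean F1" as the unweighted mean of the per-class F1 scores is an assumption, since the question does not say which averaging is meant.

```python
from collections import Counter


def per_class_report(y_true, y_pred):
    """Return precision, recall, F1, and support for every label."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)  # number of true samples per class
    pairs = list(zip(y_true, y_pred))
    report = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        # Assumed convention: a score with a zero denominator is 0.0.
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {
            "precision": precision,
            "recall": recall,
            "f1": f1,
            "support": support[label],
        }
    return report


# Made-up labels for illustration.
y_true = ["cat", "cat", "cat", "dog", "dog", "bird"]
y_pred = ["cat", "cat", "dog", "dog", "dog", "cat"]

report = per_class_report(y_true, y_pred)
for label, row in report.items():
    print(label, {k: round(v, 3) for k, v in row.items()})

# One reading of "mean F1": the unweighted mean over classes.
mean_f1 = sum(row["f1"] for row in report.values()) / len(report)
print(f"mean F1: {mean_f1:.3f}")
```

Each class gets its own precision, recall, and F1 by treating that class as "positive" and all other classes as "negative", which is how multiple classification results end up contributing to a single mean.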


Sources:

https://stackoverflow.com/questions/55740220

https://stackoverflow.com/questions/41277915
